Test 4: regional gravity field¶

import packages

[1]:

%load_ext autoreload

%autoreload 2

import copy

import logging

import os

import pathlib

import pickle

import string

import numpy as np

import pandas as pd

import scipy as sp

import verde as vd

import xarray as xr

from invert4geom import inversion, optimization, plotting, regional, uncertainty, utils

from polartoolkit import maps, profiles

from polartoolkit import utils as polar_utils

import synthetic_bathymetry_inversion.plotting as synth_plotting

from synthetic_bathymetry_inversion import synthetic

os.environ["POLARTOOLKIT_HEMISPHERE"] = "south"

logging.getLogger().setLevel(logging.INFO)

/home/sungw937/miniforge3/envs/synthetic_bathymetry_inversion/lib/python3.12/site-packages/UQpy/__init__.py:6: UserWarning:

pkg_resources is deprecated as an API. See https://setuptools.pypa.io/en/latest/pkg_resources.html. The pkg_resources package is slated for removal as early as 2025-11-30. Refrain from using this package or pin to Setuptools<81.

[2]:

fpath = "../results/Ross_Sea/Ross_Sea_04"

Get synthetic model data¶

[3]:

# set grid parameters

spacing = 2e3

inversion_region = (-40e3, 110e3, -1600e3, -1400e3)

true_density_contrast = 1476

bathymetry, basement, grav_df = synthetic.load_synthetic_model(

spacing=spacing,

inversion_region=inversion_region,

buffer=spacing * 10,

basement=True,

zref=0,

bathymetry_density_contrast=true_density_contrast,

)

buffer_region = polar_utils.get_grid_info(bathymetry)[1]

requested spacing (2000.0) is smaller than the original (5000.0).

requested spacing (2000.0) is smaller than the original (5000.0).

[4]:

# normalize regional gravity between -1 and 1

grav_df["basement_grav_normalized"] = (

vd.grid_to_table(

utils.normalize_xarray(

grav_df.set_index(["northing", "easting"]).to_xarray().basement_grav,

low=-1,

high=1,

)

)

.reset_index()

.basement_grav

)

grav_df = grav_df.drop(columns=["basement_grav", "disturbance", "gravity_anomaly"])

[5]:

# re-scale the regional gravity

regional_grav = utils.normalize_xarray(

grav_df.set_index(["northing", "easting"]).to_xarray().basement_grav_normalized,

low=0,

high=90,

).rename("basement_grav")

regional_grav -= regional_grav.mean()

# add to dataframe

grav_df["basement_grav"] = vd.grid_to_table(regional_grav).reset_index().basement_grav

# add basement and bathymetry forward gravities together to make observed gravity

grav_df["gravity_anomaly"] = grav_df.bathymetry_grav + grav_df.basement_grav

new_reg = grav_df.set_index(["northing", "easting"]).to_xarray().basement_grav

new_reg -= new_reg.mean()

print(utils.rmse(new_reg))

18.399763167862545

[6]:

grav_df.basement_grav.std()

[6]:

np.float64(18.400961810459684)

[7]:

grav_df.bathymetry_grav.std()

[7]:

np.float64(6.458148145703343)

[8]:

grav_df.gravity_anomaly.std()

[8]:

np.float64(18.70563477739872)

[9]:

grav_df.gravity_anomaly.std() / grav_df.basement_grav.std()

[9]:

np.float64(1.016557447924589)

[10]:

grav_df

[10]:

| northing | easting | upward | bathymetry_grav | basement_grav_normalized | basement_grav | gravity_anomaly | |

|---|---|---|---|---|---|---|---|

| 0 | -1600000.0 | -40000.0 | 1000.0 | -35.551054 | -0.575610 | -36.298772 | -71.849826 |

| 1 | -1600000.0 | -38000.0 | 1000.0 | -36.054657 | -0.523194 | -33.940048 | -69.994704 |

| 2 | -1600000.0 | -36000.0 | 1000.0 | -36.473146 | -0.466341 | -31.381660 | -67.854806 |

| 3 | -1600000.0 | -34000.0 | 1000.0 | -36.755608 | -0.406001 | -28.666395 | -65.422003 |

| 4 | -1600000.0 | -32000.0 | 1000.0 | -36.951029 | -0.343146 | -25.837899 | -62.788928 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 7671 | -1400000.0 | 102000.0 | 1000.0 | -25.760090 | 0.399165 | 7.566093 | -18.193997 |

| 7672 | -1400000.0 | 104000.0 | 1000.0 | -25.911429 | 0.334795 | 4.669468 | -21.241961 |

| 7673 | -1400000.0 | 106000.0 | 1000.0 | -26.032814 | 0.268739 | 1.696911 | -24.335902 |

| 7674 | -1400000.0 | 108000.0 | 1000.0 | -26.121903 | 0.201713 | -1.319231 | -27.441134 |

| 7675 | -1400000.0 | 110000.0 | 1000.0 | -26.206160 | 0.134416 | -4.347629 | -30.553790 |

7676 rows × 7 columns

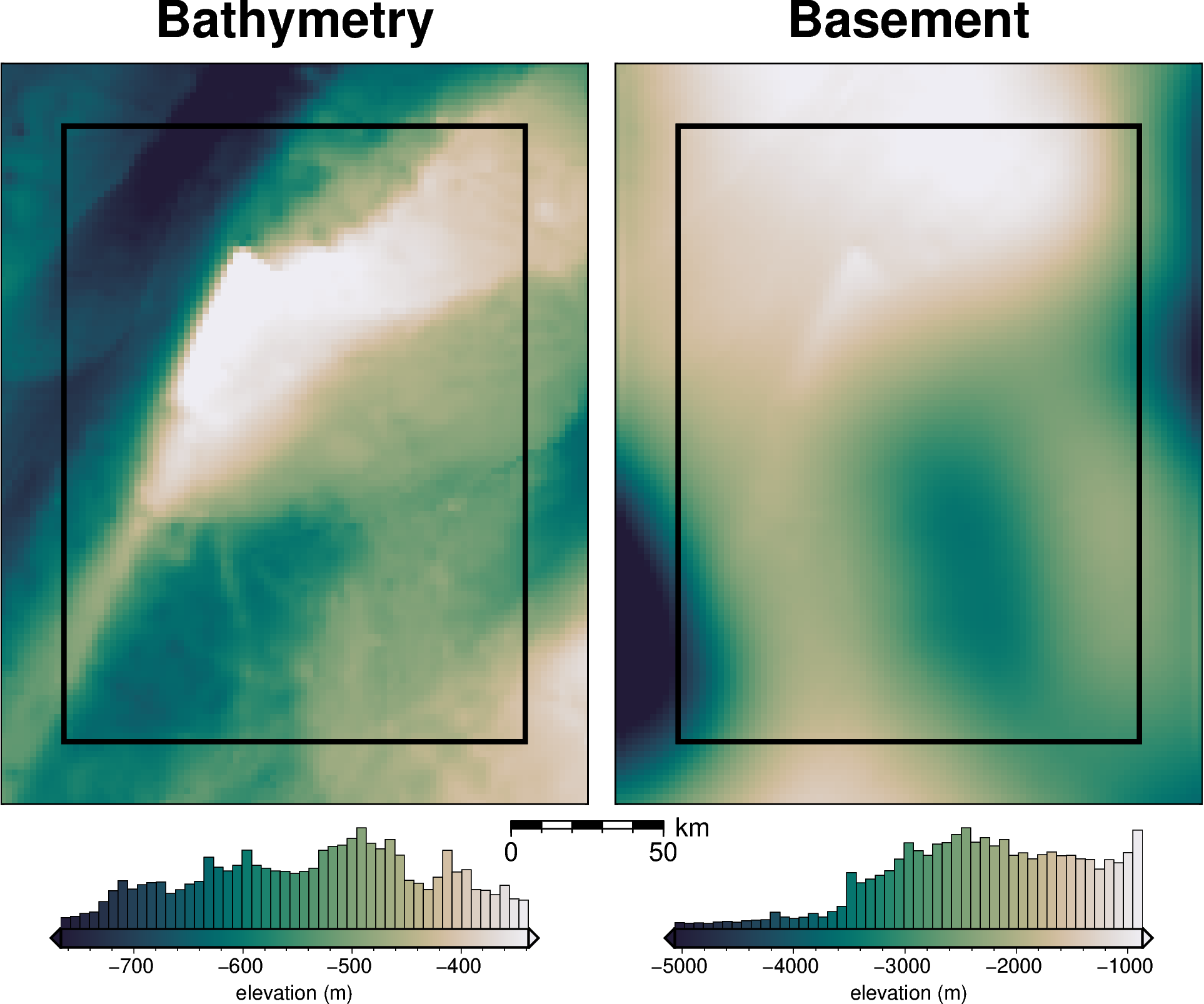

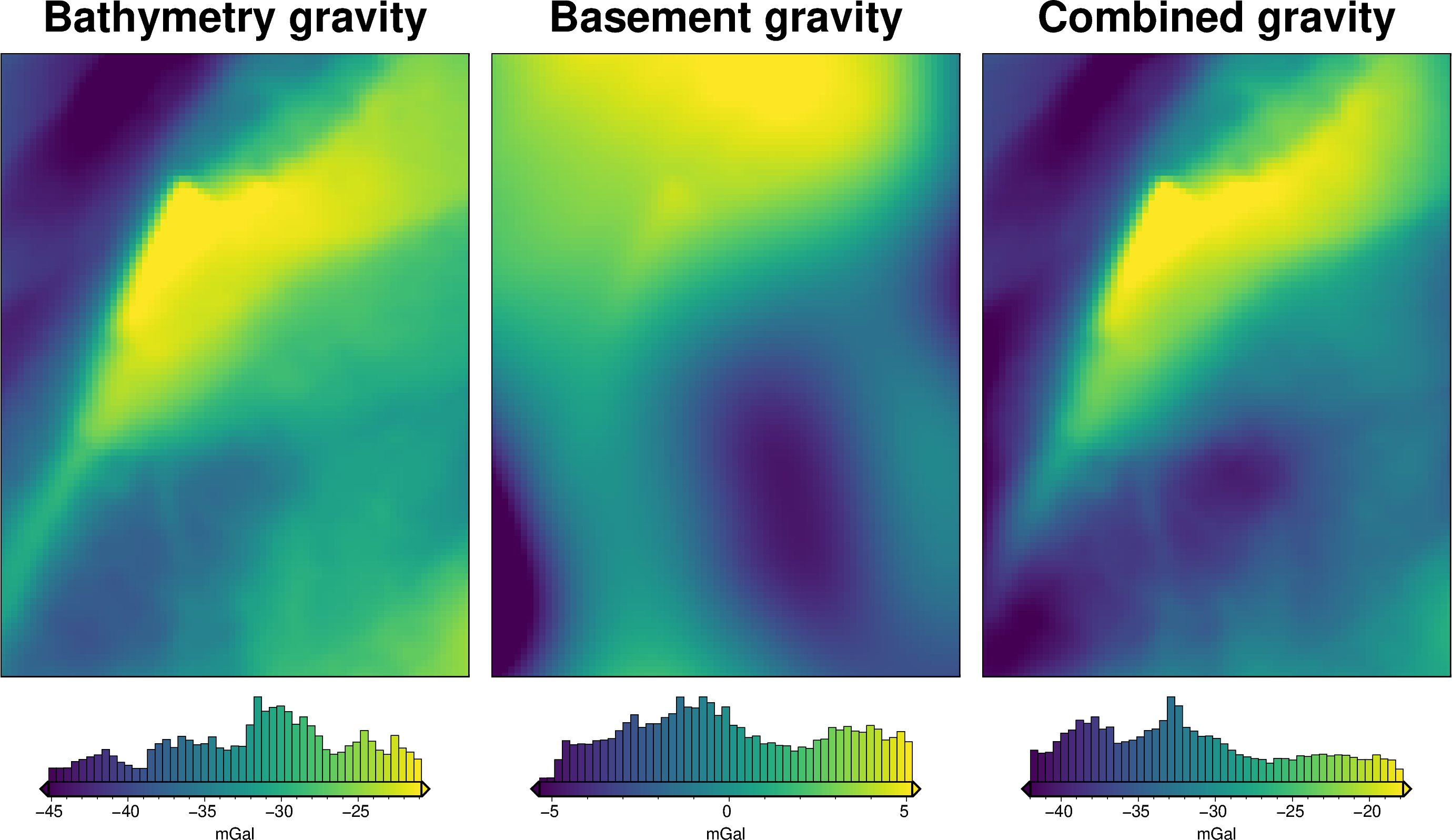

[11]:

grav_grid = grav_df.set_index(["northing", "easting"]).to_xarray()

fig = maps.plot_grd(

bathymetry,

region=inversion_region,

fig_height=10,

title="Bathymetry",

hist=True,

cmap="rain",

reverse_cpt=True,

cbar_label="elevation (m)",

robust=True,

)

fig.text(

position="TL",

text="a",

fill="white",

pen=True,

font="16p,Helvetica,black",

offset="j.2/.2",

clearance="+tO",

no_clip=True,

)

fig = maps.plot_grd(

basement,

region=inversion_region,

fig_height=10,

title="Basement",

hist=True,

cmap="rain",

reverse_cpt=True,

cbar_label="elevation (m)",

fig=fig,

origin_shift="xshift",

robust=True,

scalebar=True,

scalebar_position="n-.05/-.03",

)

fig.text(

position="TL",

text="b",

fill="white",

pen=True,

font="16p,Helvetica,black",

offset="j.2/.2",

clearance="+tO",

no_clip=True,

)

# shift down and 3 to the left

fig = maps.plot_grd(

grav_grid.bathymetry_grav,

region=inversion_region,

fig_height=10,

title="Bathymetry gravity",

hist=True,

cbar_label="mGal",

fig=fig,

origin_shift="both",

xshift_amount=-1,

yshift_amount=-1,

robust=True,

)

fig.text(

position="TL",

text="c",

fill="white",

pen=True,

font="16p,Helvetica,black",

offset="j.2/.2",

clearance="+tO",

no_clip=True,

)

fig = maps.plot_grd(

grav_grid.basement_grav,

region=inversion_region,

fig_height=10,

title="Basement gravity",

hist=True,

cbar_label="mGal",

fig=fig,

origin_shift="xshift",

robust=True,

)

fig.text(

position="TL",

text="d",

fill="white",

pen=True,

font="16p,Helvetica,black",

offset="j.2/.2",

clearance="+tO",

no_clip=True,

)

fig = maps.plot_grd(

grav_grid.gravity_anomaly,

region=inversion_region,

fig_height=10,

title="Observed gravity",

hist=True,

cbar_label="mGal",

fig=fig,

origin_shift="both",

yshift_amount=0.5,

robust=True,

)

fig.text(

position="TL",

text="e",

fill="white",

pen=True,

font="16p,Helvetica,black",

offset="j.2/.2",

clearance="+tO",

no_clip=True,

)

fig.show()

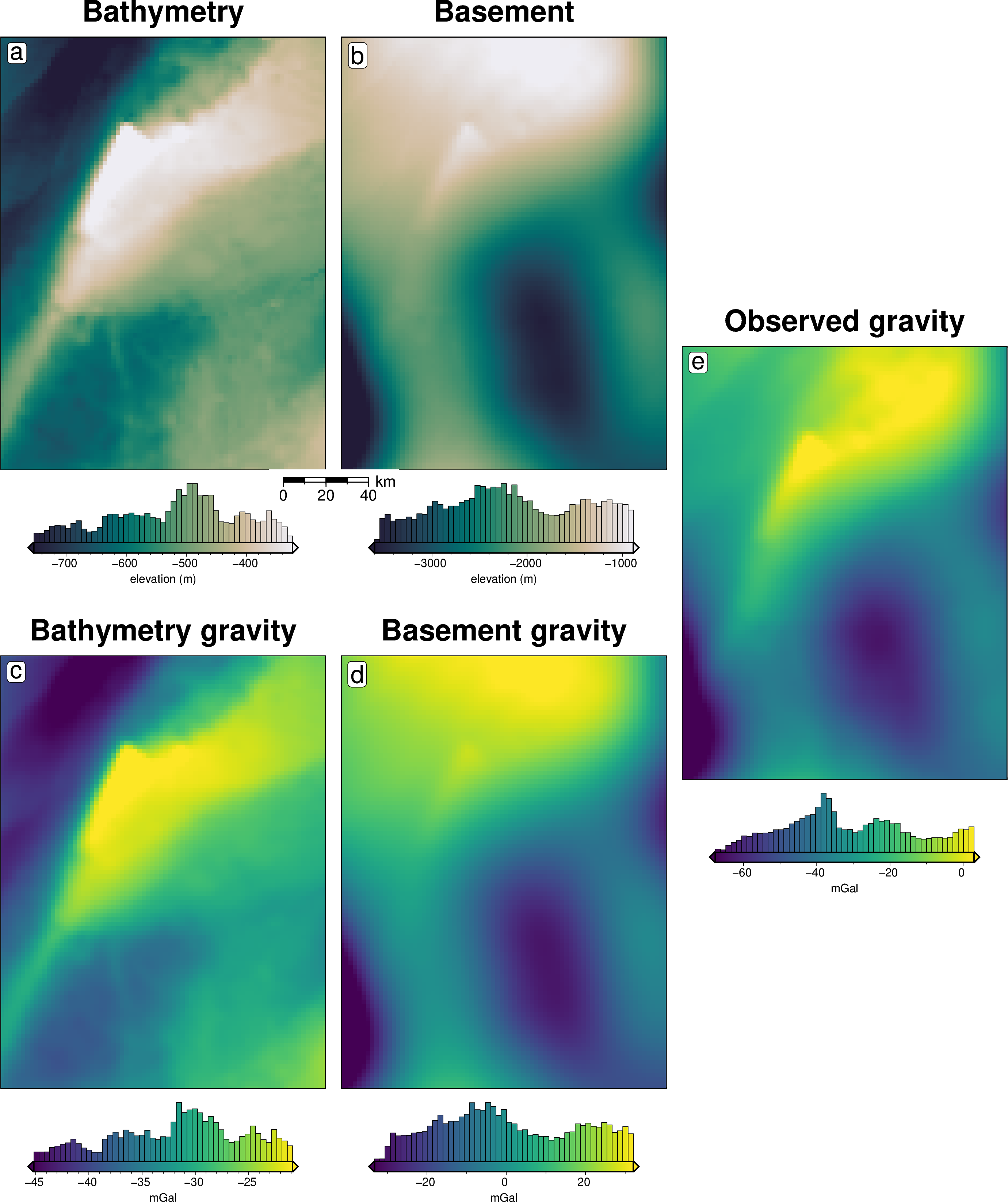

Make starting bathymetry model¶

[12]:

# semi-regularly spaced

constraint_points = synthetic.constraint_layout_number(

shape=(6, 7),

region=inversion_region,

padding=-spacing,

shapefile="../results/Ross_Sea/Ross_Sea_outline.shp",

add_outside_points=True,

grid_spacing=spacing,

)

# sample true topography at these points

constraint_points = utils.sample_grids(

constraint_points,

bathymetry,

"true_upward",

coord_names=("easting", "northing"),

)

constraint_points["upward"] = constraint_points.true_upward

constraint_points.head()

[12]:

| northing | easting | inside | true_upward | upward | |

|---|---|---|---|---|---|

| 0 | -1600000.0 | -40000.0 | False | -601.093994 | -601.093994 |

| 1 | -1600000.0 | -38000.0 | False | -609.216919 | -609.216919 |

| 2 | -1600000.0 | -36000.0 | False | -616.355957 | -616.355957 |

| 3 | -1600000.0 | -34000.0 | False | -621.262268 | -621.262268 |

| 4 | -1600000.0 | -32000.0 | False | -625.510925 | -625.510925 |

[13]:

# grid the sampled values using verde

starting_topography_kwargs = dict(

method="splines",

region=buffer_region,

spacing=spacing,

constraints_df=constraint_points,

dampings=None,

)

starting_bathymetry = utils.create_topography(**starting_topography_kwargs)

starting_bathymetry

[13]:

<xarray.DataArray 'scalars' (northing: 121, easting: 96)> Size: 93kB

array([[-541.24413869, -544.57181187, -547.92293689, ..., -360.00006254,

-357.06767408, -354.19957766],

[-543.34402688, -546.81675803, -550.35256333, ..., -362.90253226,

-359.96874158, -357.11431886],

[-545.05533622, -548.66036838, -552.37518163, ..., -365.66137905,

-362.73269531, -359.90052824],

...,

[-591.95335283, -595.518822 , -599.06869705, ..., -440.89315875,

-440.6944619 , -440.40553782],

[-590.53134833, -594.09076637, -597.64079288, ..., -440.69158328,

-440.42525249, -440.07197234],

[-589.16632671, -592.73504777, -596.30209679, ..., -440.51760947,

-440.1713932 , -439.74434037]], shape=(121, 96))

Coordinates:

* northing (northing) float64 968B -1.62e+06 -1.618e+06 ... -1.38e+06

* easting (easting) float64 768B -6e+04 -5.8e+04 ... 1.28e+05 1.3e+05

Attributes:

metadata: Generated by SplineCV(cv=KFold(n_splits=5, random_state=0, shu...

damping: None[14]:

# sample the inverted topography at the constraint points

constraint_points = utils.sample_grids(

constraint_points,

starting_bathymetry,

"starting_bathymetry",

coord_names=("easting", "northing"),

)

constraint_points["dif"] = (

constraint_points.true_upward - constraint_points.starting_bathymetry

)

rmse = utils.rmse(constraint_points.dif)

print(f"RMSE: {rmse:.2f} m")

constraint_points

RMSE: 0.03 m

[14]:

| northing | easting | inside | true_upward | upward | starting_bathymetry | dif | |

|---|---|---|---|---|---|---|---|

| 0 | -1.600000e+06 | -4.000000e+04 | False | -601.093994 | -601.093994 | -601.093994 | 0.000000 |

| 1 | -1.600000e+06 | -3.800000e+04 | False | -609.216919 | -609.216919 | -609.216919 | 0.000000 |

| 2 | -1.600000e+06 | -3.600000e+04 | False | -616.355957 | -616.355957 | -616.355957 | 0.000000 |

| 3 | -1.600000e+06 | -3.400000e+04 | False | -621.262268 | -621.262268 | -621.262268 | 0.000000 |

| 4 | -1.600000e+06 | -3.200000e+04 | False | -625.510925 | -625.510925 | -625.510925 | 0.000000 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 881 | -1.418571e+06 | -3.637979e-12 | True | -747.305711 | -747.305711 | -747.289192 | -0.016520 |

| 882 | -1.418571e+06 | 2.333333e+04 | True | -619.672055 | -619.672055 | -619.459742 | -0.212313 |

| 883 | -1.418571e+06 | 4.666667e+04 | True | -505.761536 | -505.761536 | -505.739808 | -0.021728 |

| 884 | -1.418571e+06 | 7.000000e+04 | True | -447.753091 | -447.753091 | -447.782831 | 0.029740 |

| 885 | -1.418571e+06 | 9.333333e+04 | True | -395.004206 | -395.004206 | -395.079663 | 0.075456 |

886 rows × 7 columns

[15]:

# compare starting and actual bathymetry grids

grids = polar_utils.grd_compare(

bathymetry,

starting_bathymetry,

fig_height=10,

plot=True,

cmap="rain",

reverse_cpt=True,

diff_cmap="balance+h0",

grid1_name="True bathymetry",

grid2_name="Starting bathymetry",

title="Difference",

title_font="18p,Helvetica-Bold,black",

cbar_unit="m",

cbar_label="elevation",

RMSE_decimals=0,

region=inversion_region,

inset=False,

hist=True,

label_font="16p,Helvetica,black",

points=constraint_points[constraint_points.inside],

points_style="x.2c",

)

[16]:

# the true density contrast is 1476 kg/m3

density_contrast = 1350

# set the reference level from the prisms to 0

zref = 0

density_grid = xr.where(

starting_bathymetry >= zref,

density_contrast,

-density_contrast,

)

# create layer of prisms

starting_prisms = utils.grids_to_prisms(

starting_bathymetry,

zref,

density=density_grid,

)

grav_df["starting_gravity"] = starting_prisms.prism_layer.gravity(

coordinates=(

grav_df.easting,

grav_df.northing,

grav_df.upward,

),

field="g_z",

progressbar=True,

)

grav_df

[16]:

| northing | easting | upward | bathymetry_grav | basement_grav_normalized | basement_grav | gravity_anomaly | starting_gravity | |

|---|---|---|---|---|---|---|---|---|

| 0 | -1600000.0 | -40000.0 | 1000.0 | -35.551054 | -0.575610 | -36.298772 | -71.849826 | -32.541367 |

| 1 | -1600000.0 | -38000.0 | 1000.0 | -36.054657 | -0.523194 | -33.940048 | -69.994704 | -32.965831 |

| 2 | -1600000.0 | -36000.0 | 1000.0 | -36.473146 | -0.466341 | -31.381660 | -67.854806 | -33.347648 |

| 3 | -1600000.0 | -34000.0 | 1000.0 | -36.755608 | -0.406001 | -28.666395 | -65.422003 | -33.644496 |

| 4 | -1600000.0 | -32000.0 | 1000.0 | -36.951029 | -0.343146 | -25.837899 | -62.788928 | -33.840063 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 7671 | -1400000.0 | 102000.0 | 1000.0 | -25.760090 | 0.399165 | 7.566093 | -18.193997 | -23.321506 |

| 7672 | -1400000.0 | 104000.0 | 1000.0 | -25.911429 | 0.334795 | 4.669468 | -21.241961 | -23.482116 |

| 7673 | -1400000.0 | 106000.0 | 1000.0 | -26.032814 | 0.268739 | 1.696911 | -24.335902 | -23.605602 |

| 7674 | -1400000.0 | 108000.0 | 1000.0 | -26.121903 | 0.201713 | -1.319231 | -27.441134 | -23.693171 |

| 7675 | -1400000.0 | 110000.0 | 1000.0 | -26.206160 | 0.134416 | -4.347629 | -30.553790 | -23.763780 |

7676 rows × 8 columns

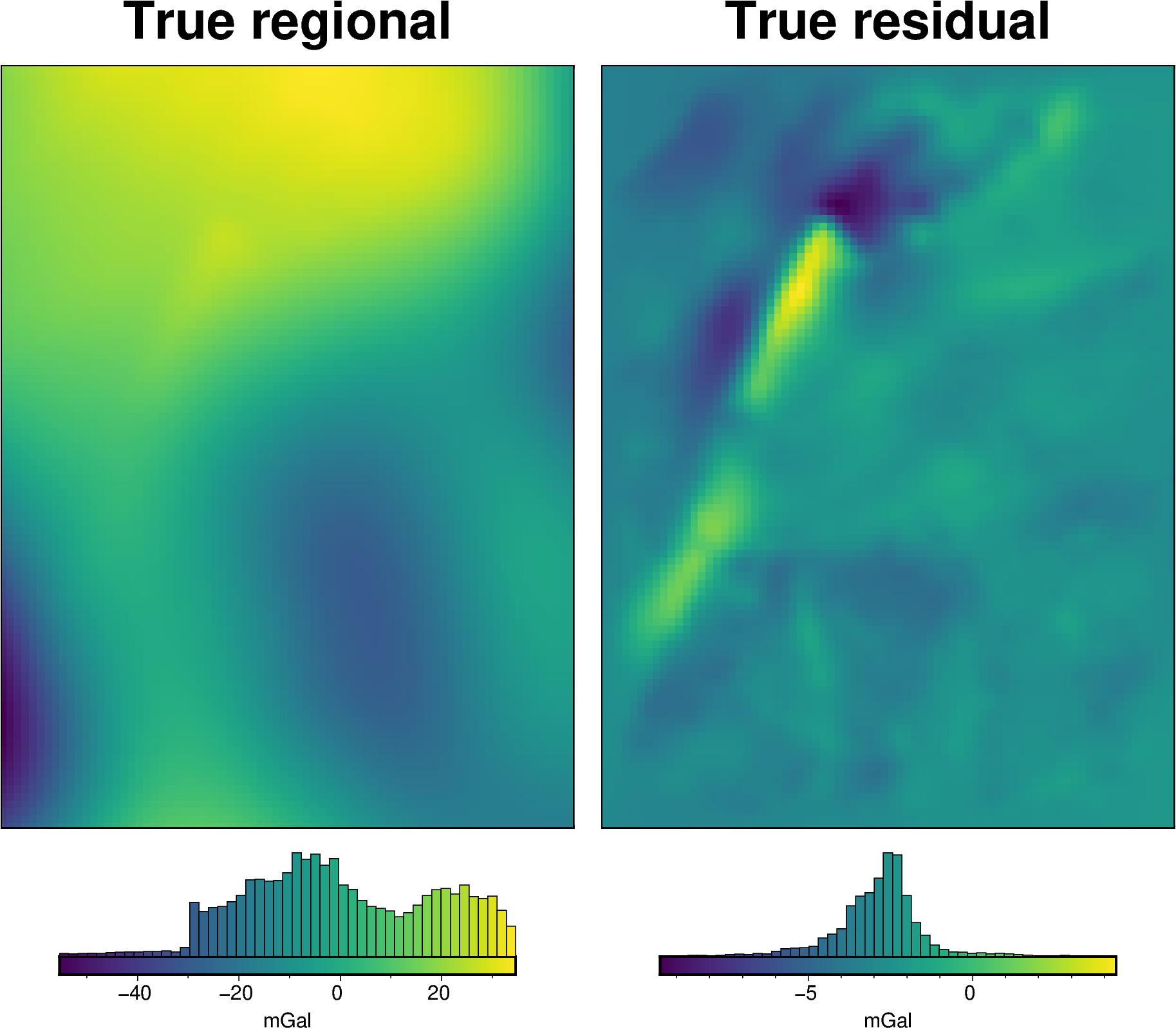

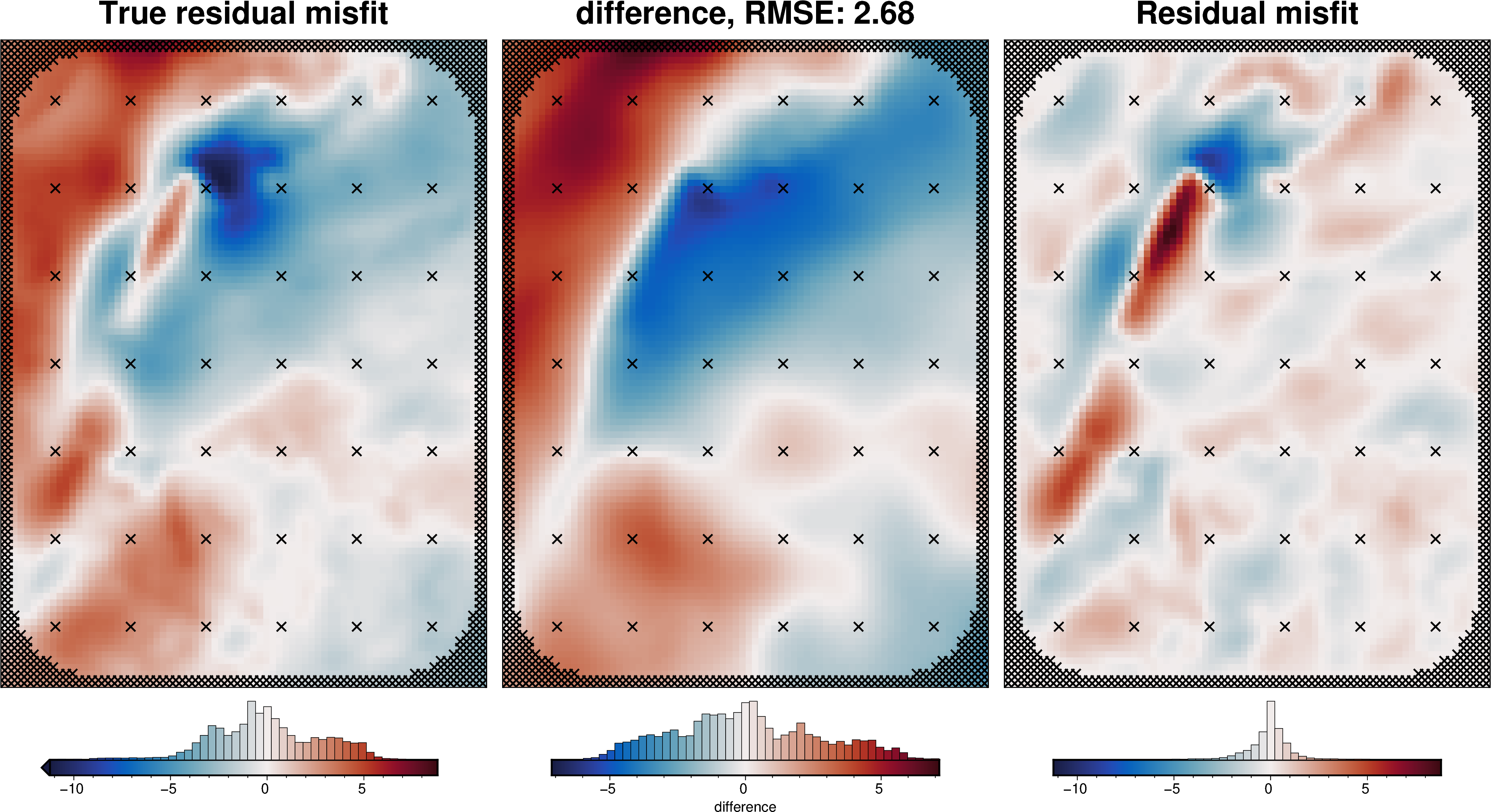

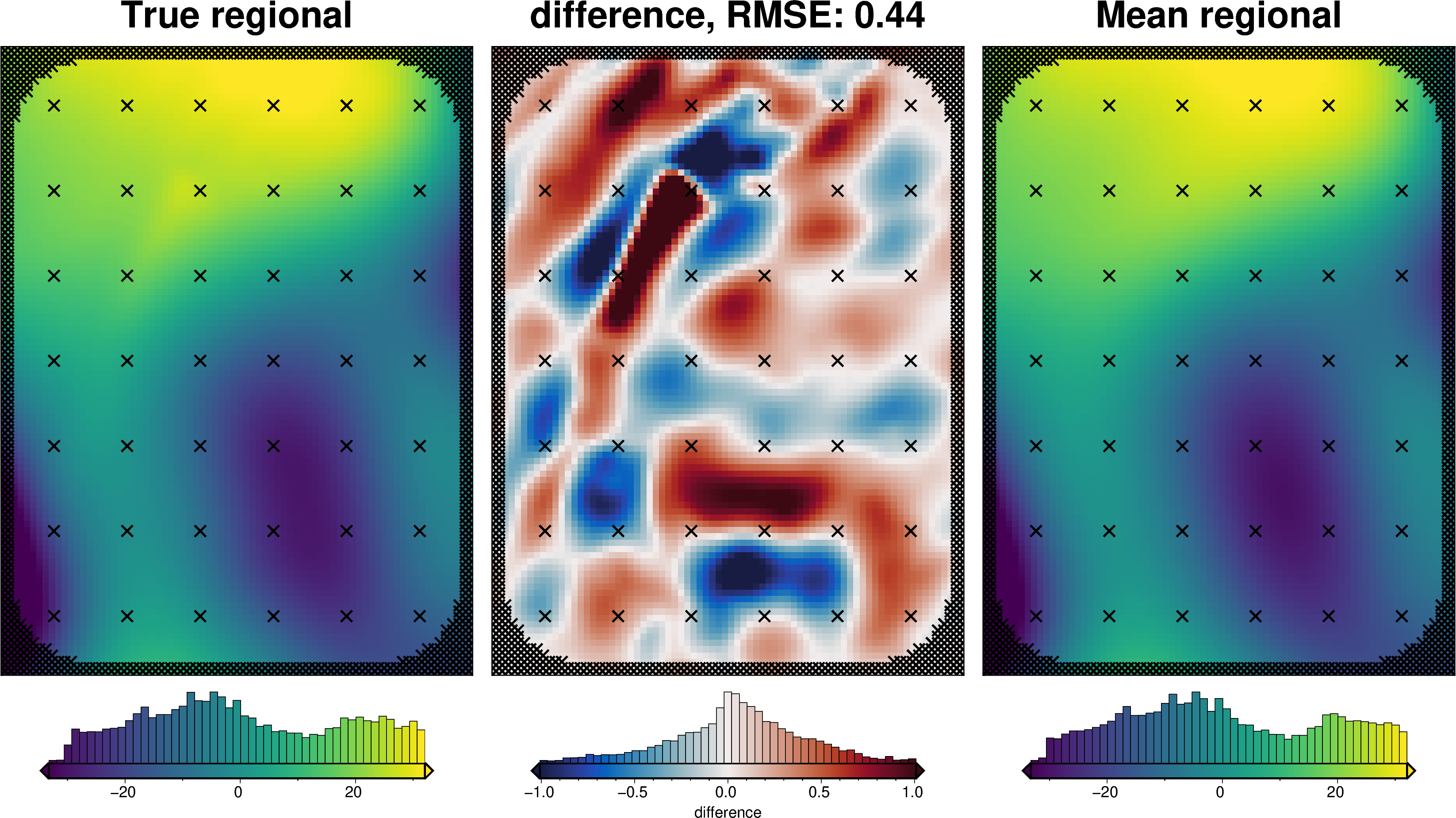

Regional misfit¶

[17]:

# calculate the true residual misfit

grav_df["true_res"] = grav_df.bathymetry_grav - grav_df.starting_gravity

# grid the results

grav_grid = grav_df.set_index(["northing", "easting"]).to_xarray()

fig = maps.plot_grd(

grav_grid.basement_grav,

fig_height=10,

title="True regional",

hist=True,

cbar_label="mGal",

)

fig = maps.plot_grd(

grav_grid.true_res,

fig=fig,

origin_shift="xshift",

fig_height=10,

title="True residual",

hist=True,

cbar_label="mGal",

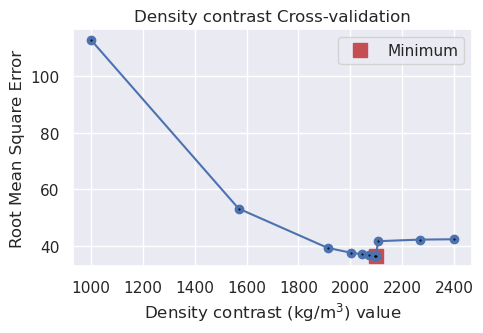

)

fig.show()

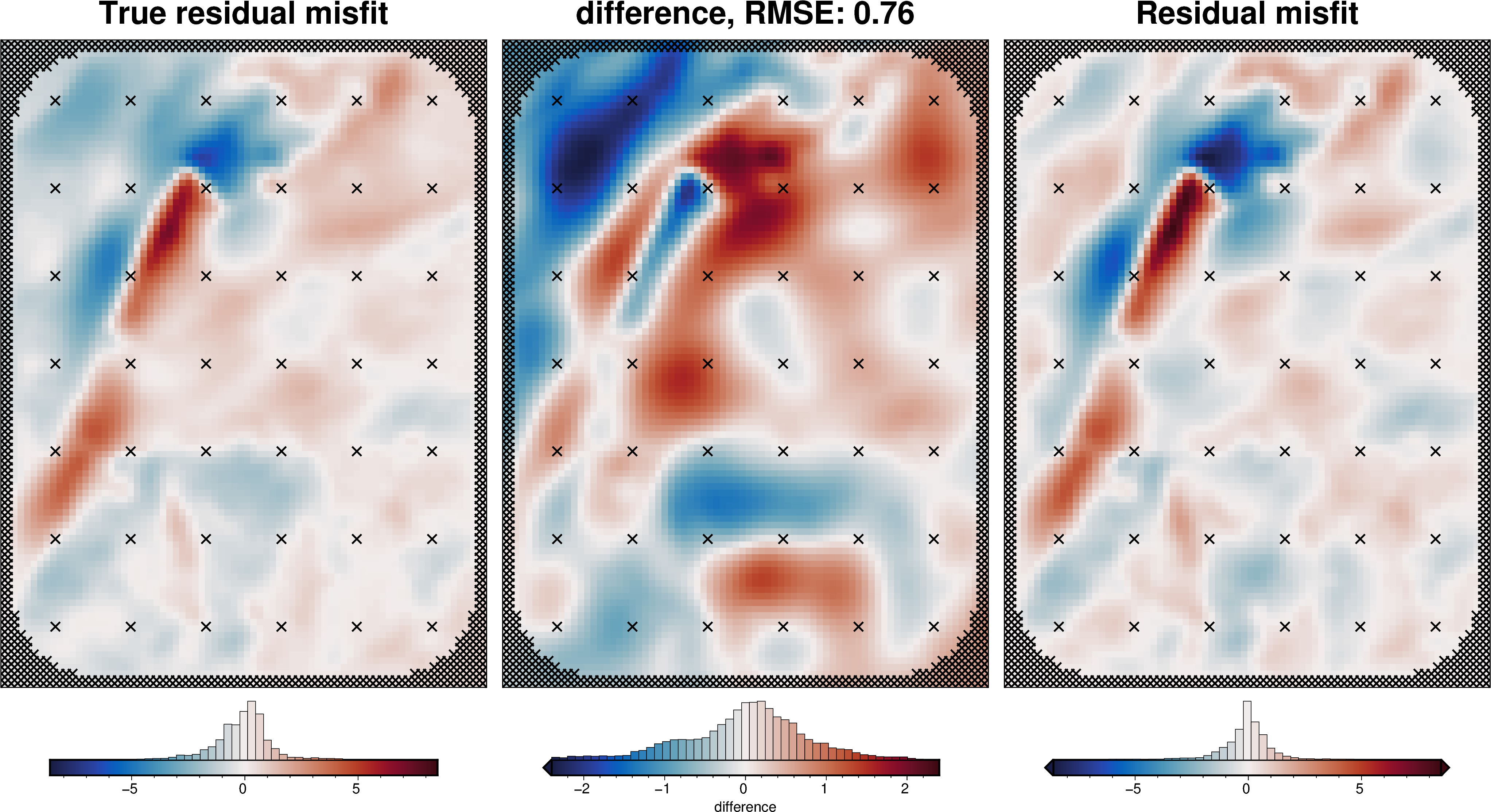

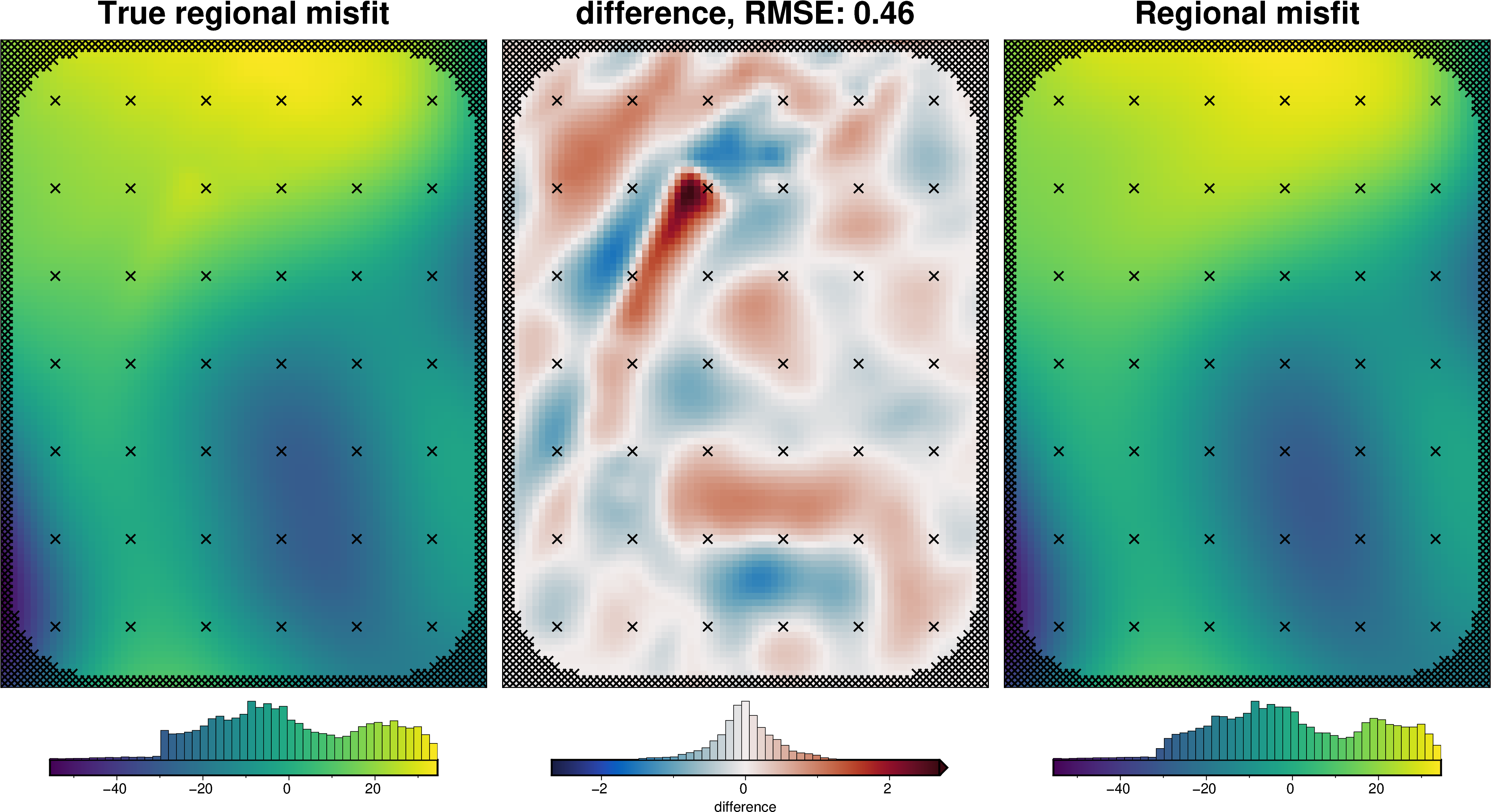

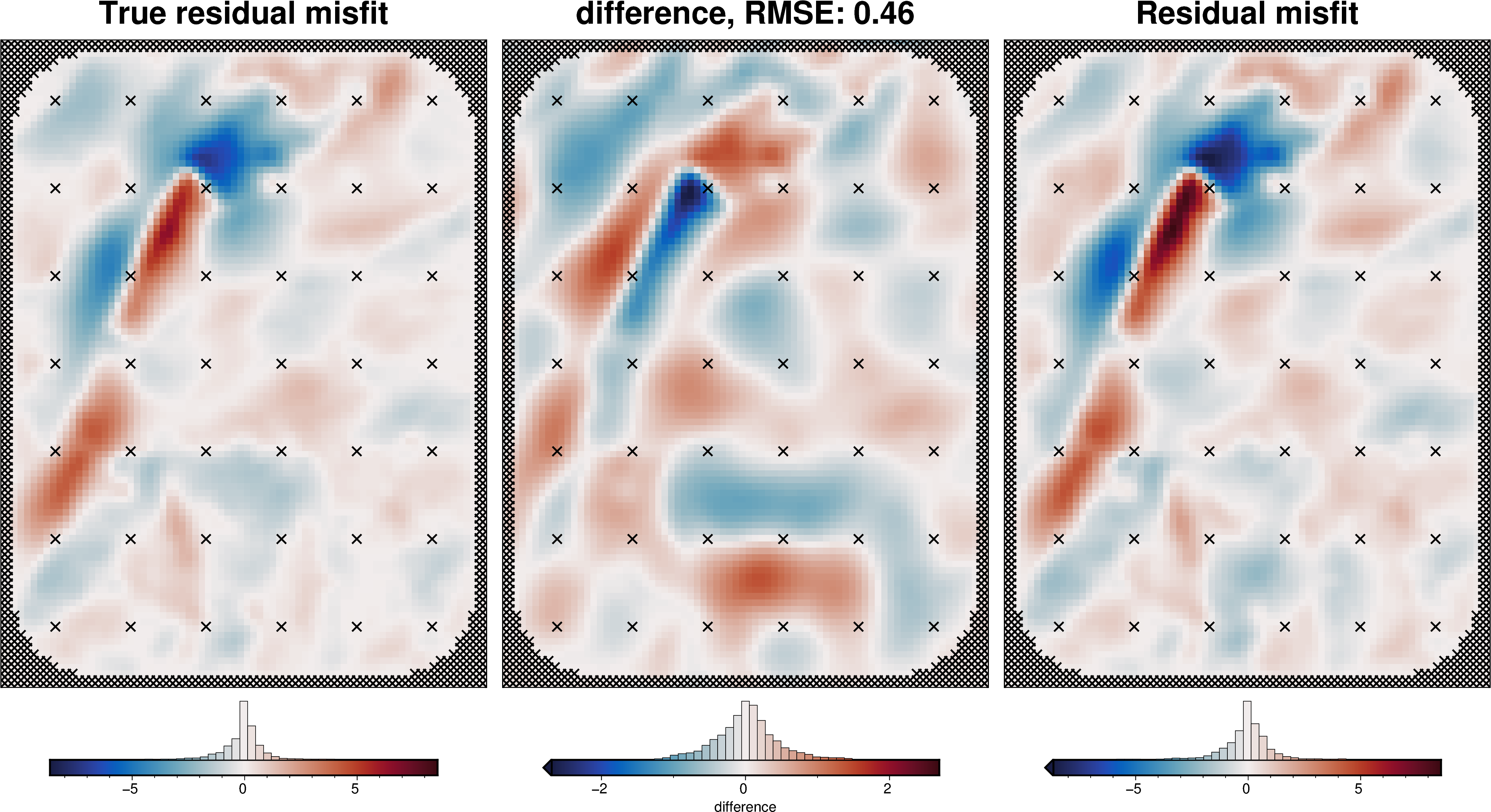

[18]:

def regional_comparison(df):

# grid the results

grav_grid = df.set_index(["northing", "easting"]).to_xarray()

# calculate the true residual and regional misfit

grav_grid["true_res"] = grav_grid.bathymetry_grav - grav_grid.starting_gravity

grav_grid["true_reg"] = grav_grid.basement_grav

# compare with true regional

_ = polar_utils.grd_compare(

grav_grid.true_reg - (grav_grid.true_reg.mean() - grav_grid.reg.mean()),

grav_grid.reg,

plot=True,

grid1_name="True regional misfit",

grid2_name="Regional misfit",

hist=True,

inset=False,

verbose="q",

title="difference",

grounding_line=False,

points=constraint_points,

points_style="x.3c",

)

# compare with true residual

_ = polar_utils.grd_compare(

grav_grid.true_res - (grav_grid.true_res.mean() - grav_grid.res.mean()),

grav_grid.res,

plot=True,

grid1_name="True residual misfit",

grid2_name="Residual misfit",

cmap="balance+h0",

hist=True,

inset=False,

verbose="q",

title="difference",

grounding_line=False,

points=constraint_points,

points_style="x.3c",

)

[19]:

# estimate regional with the mean misfit at constraints

regional_grav_kwargs = dict(

method="constraints",

grid_method="eq_sources",

constraints_df=constraint_points,

cv=True,

cv_kwargs=dict(

n_trials=20,

depth_limits=(100, 400e3),

progressbar=False,

fname="../tmp_outputs/Ross_Sea_04_regional_sep",

),

block_size=None,

damping=None,

)

[20]:

temp_reg_kwargs = copy.deepcopy(regional_grav_kwargs)

# temporarily set some kwargs

temp_reg_kwargs["cv_kwargs"]["plot"] = True

temp_reg_kwargs["cv_kwargs"]["progressbar"] = True

grav_df = regional.regional_separation(

grav_df=grav_df,

**temp_reg_kwargs,

)

regional_comparison(grav_df)

grav_df.describe()

[20]:

| northing | easting | upward | bathymetry_grav | basement_grav_normalized | basement_grav | gravity_anomaly | starting_gravity | true_res | misfit | reg | res | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| count | 7.676000e+03 | 7676.000000 | 7676.0 | 7676.000000 | 7676.000000 | 7.676000e+03 | 7676.000000 | 7676.000000 | 7676.000000 | 7676.000000 | 7676.000000 | 7676.000000 |

| mean | -1.500000e+06 | 35000.000000 | 1000.0 | -31.727281 | 0.231030 | -7.583072e-15 | -31.727281 | -28.869499 | -2.857783 | -2.857783 | -2.757333 | -0.100450 |

| std | 5.831332e+04 | 43877.680138 | 0.0 | 6.458148 | 0.408910 | 1.840096e+01 | 18.705635 | 5.735684 | 1.373353 | 18.203440 | 18.308589 | 1.507758 |

| min | -1.600000e+06 | -40000.000000 | 1000.0 | -48.457772 | -1.000000 | -5.539633e+01 | -86.255473 | -44.455618 | -9.507972 | -58.143654 | -58.148433 | -8.610022 |

| 25% | -1.550000e+06 | -2500.000000 | 1000.0 | -36.286366 | -0.073820 | -1.371824e+01 | -44.456508 | -32.676771 | -3.516046 | -16.404558 | -16.478774 | -0.528250 |

| 50% | -1.500000e+06 | 35000.000000 | 1000.0 | -31.030069 | 0.181836 | -2.213697e+00 | -34.787733 | -28.426806 | -2.725032 | -5.023125 | -4.863039 | -0.005071 |

| 75% | -1.450000e+06 | 72500.000000 | 1000.0 | -27.278975 | 0.596584 | 1.644995e+01 | -18.710925 | -24.769776 | -2.210131 | 13.396719 | 13.664678 | 0.447635 |

| max | -1.400000e+06 | 110000.000000 | 1000.0 | -16.585798 | 1.000000 | 3.460367e+01 | 9.233533 | -15.540467 | 4.358587 | 32.411323 | 31.110975 | 8.509408 |

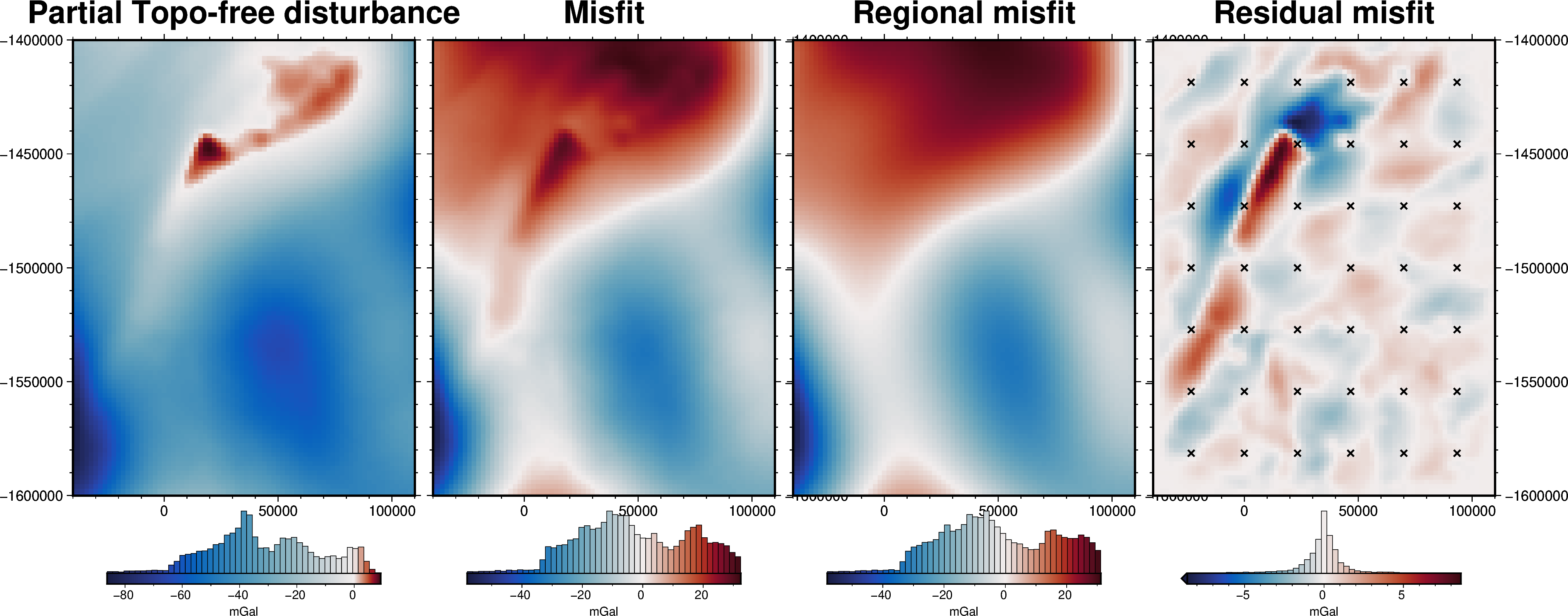

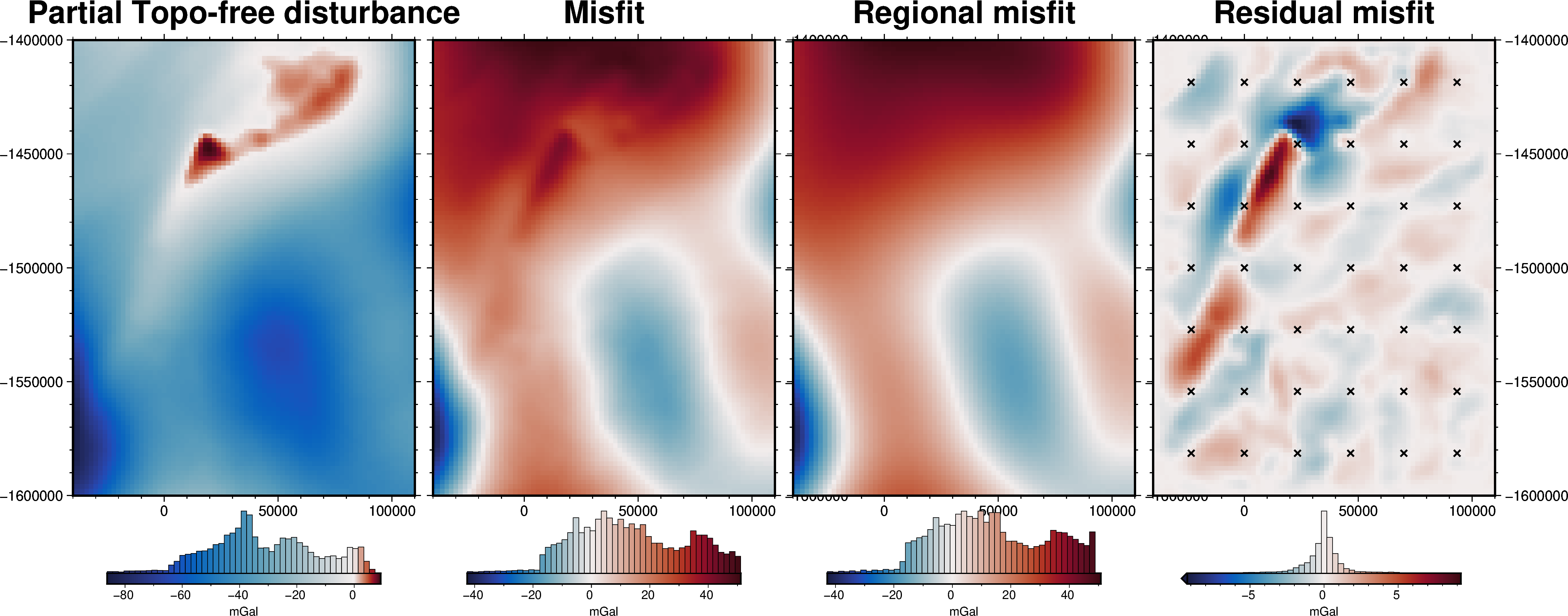

[21]:

grav_grid = grav_df.set_index(["northing", "easting"]).to_xarray()

fig = maps.plot_grd(

grav_grid.gravity_anomaly,

region=inversion_region,

fig_height=10,

title="Partial Topo-free disturbance",

cmap="balance+h0",

hist=True,

cbar_label="mGal",

frame=["nSWe", "xaf10000", "yaf10000"],

)

fig = maps.plot_grd(

grav_grid.misfit,

region=inversion_region,

fig=fig,

origin_shift="xshift",

fig_height=10,

title="Misfit",

cmap="balance+h0",

hist=True,

cbar_label="mGal",

frame=["nSwE", "xaf10000", "yaf10000"],

)

fig = maps.plot_grd(

grav_grid.reg,

region=inversion_region,

fig=fig,

origin_shift="xshift",

fig_height=10,

title="Regional misfit",

cmap="balance+h0",

hist=True,

cbar_label="mGal",

frame=["nSwE", "xaf10000", "yaf10000"],

)

fig = maps.plot_grd(

grav_grid.res,

region=inversion_region,

fig=fig,

origin_shift="xshift",

fig_height=10,

title="Residual misfit",

cmap="balance+h0",

cpt_lims=[-vd.maxabs(grav_grid.res), vd.maxabs(grav_grid.res)],

hist=True,

cbar_label="mGal",

frame=["nSwE", "xaf10000", "yaf10000"],

points=constraint_points[constraint_points.inside],

points_style="x.2c",

)

fig.show()

[22]:

# set kwargs to pass to the inversion

kwargs = {

# set stopping criteria

"max_iterations": 200,

"l2_norm_tolerance": 0.2**0.5, # square root of the gravity noise

"delta_l2_norm_tolerance": 1.008,

}

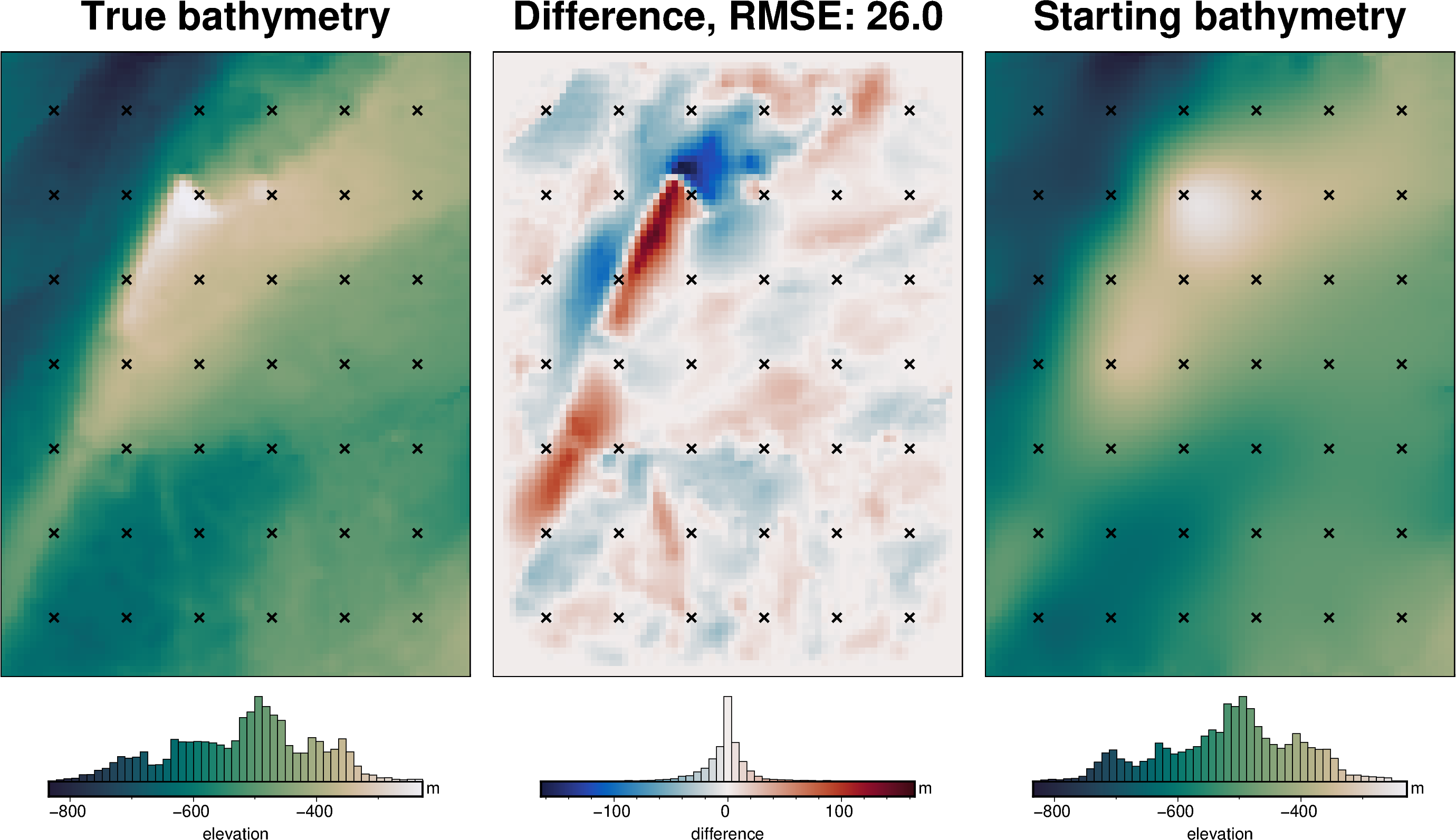

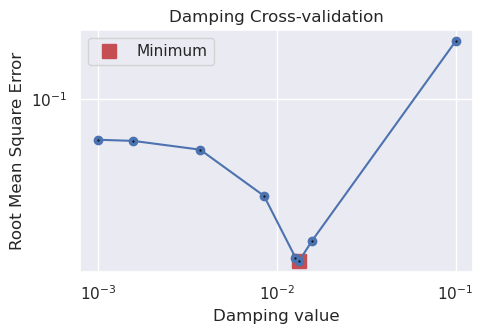

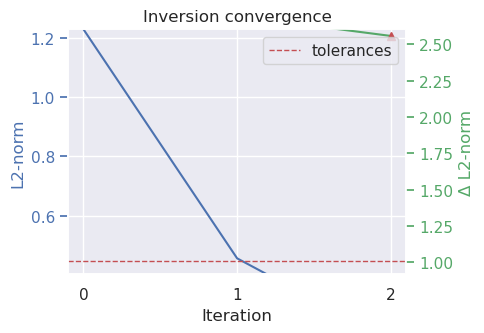

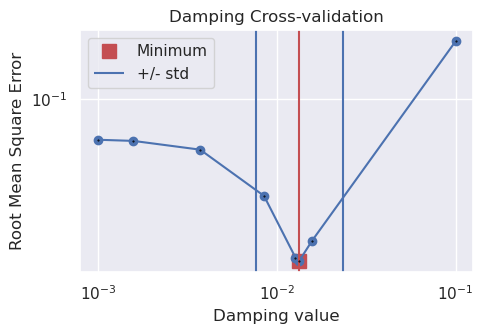

Damping Cross Validation¶

[44]:

logging.getLogger().setLevel(logging.INFO)

# run the inversion workflow, including a cross validation for the damping parameter

results = inversion.run_inversion_workflow(

grav_df=grav_df,

starting_prisms=starting_prisms,

# for creating test/train splits

grav_spacing=spacing,

inversion_region=inversion_region,

run_damping_cv=True,

damping_limits=(0.001, 0.1),

damping_cv_trials=8,

fname=f"{fpath}_damping_cv",

**kwargs,

)

[23]:

# load saved inversion results

with pathlib.Path(f"{fpath}_damping_cv_results.pickle").open("rb") as f:

results = pickle.load(f)

# load study

with pathlib.Path(f"{fpath}_damping_cv_damping_cv_study.pickle").open("rb") as f:

study = pickle.load(f)

# collect the results

topo_results, grav_results, parameters, elapsed_time = results

[24]:

best_damping = parameters.get("Solver damping")

kwargs["solver_damping"] = best_damping

best_damping

[24]:

0.013382994085308205

[25]:

study_df = study.trials_dataframe()

plotting.plot_cv_scores(

study_df.value,

study_df.params_damping,

param_name="Damping",

logx=True,

logy=True,

)

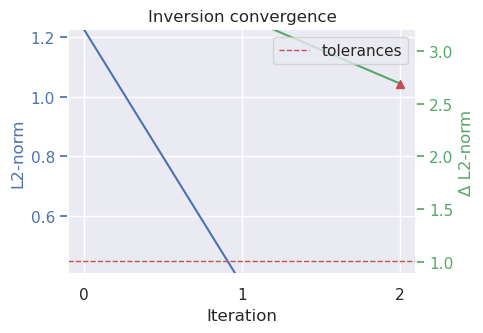

plotting.plot_convergence(

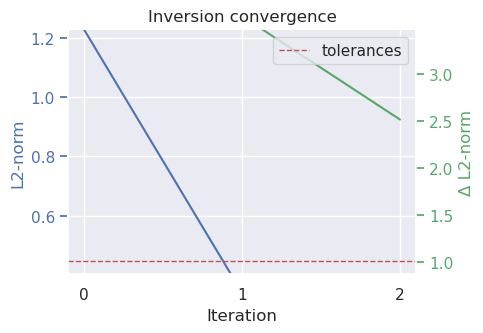

grav_results,

params=parameters,

)

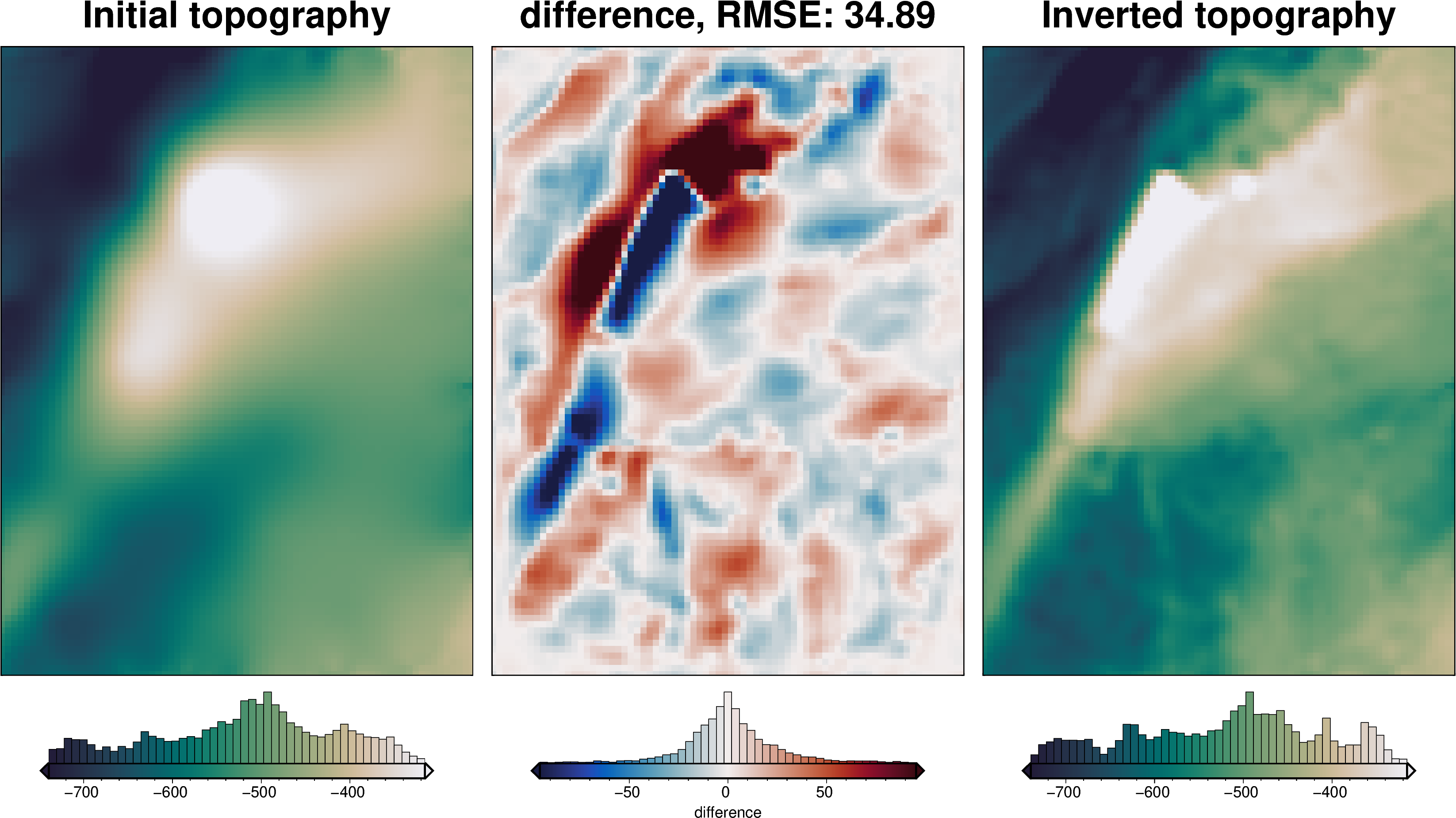

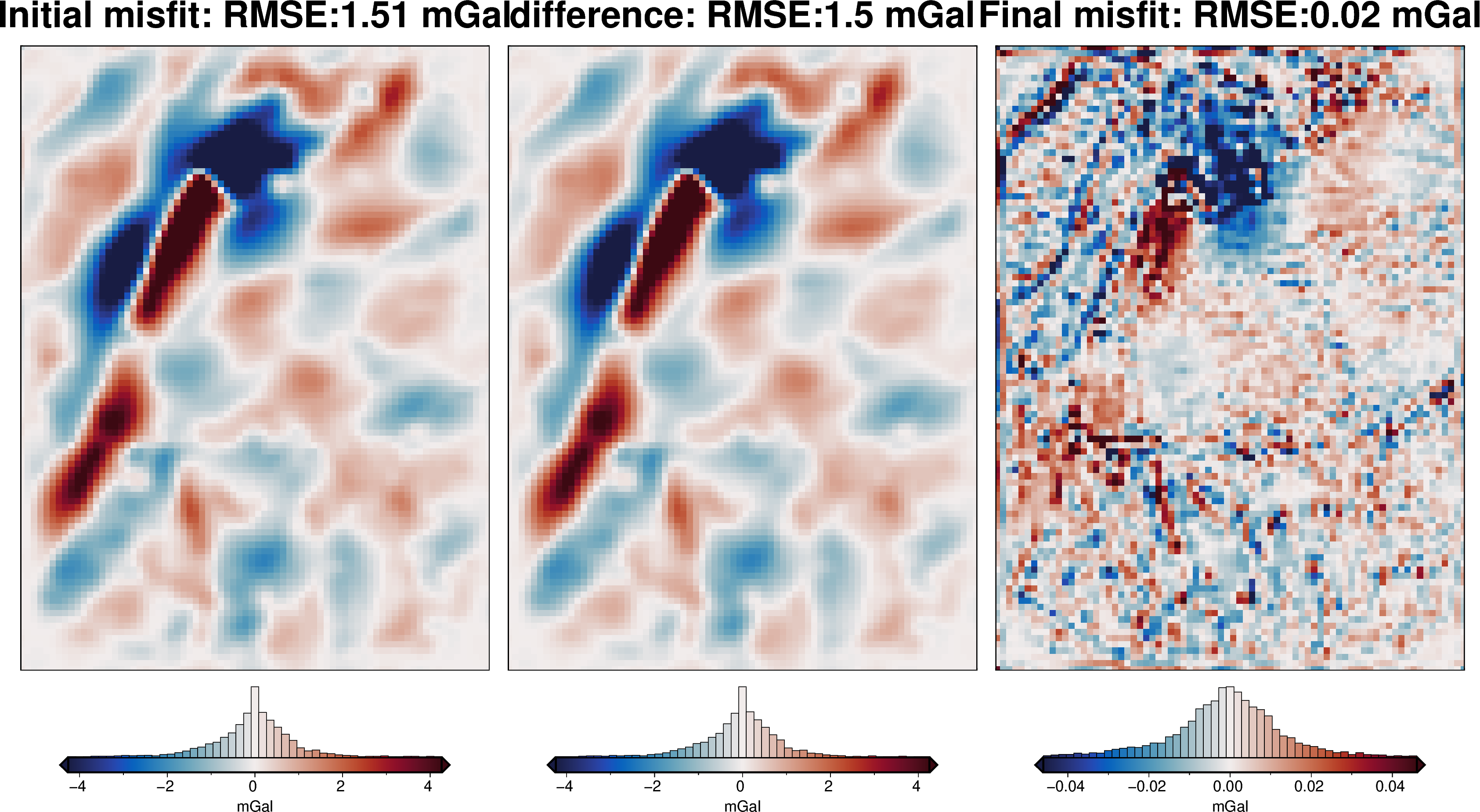

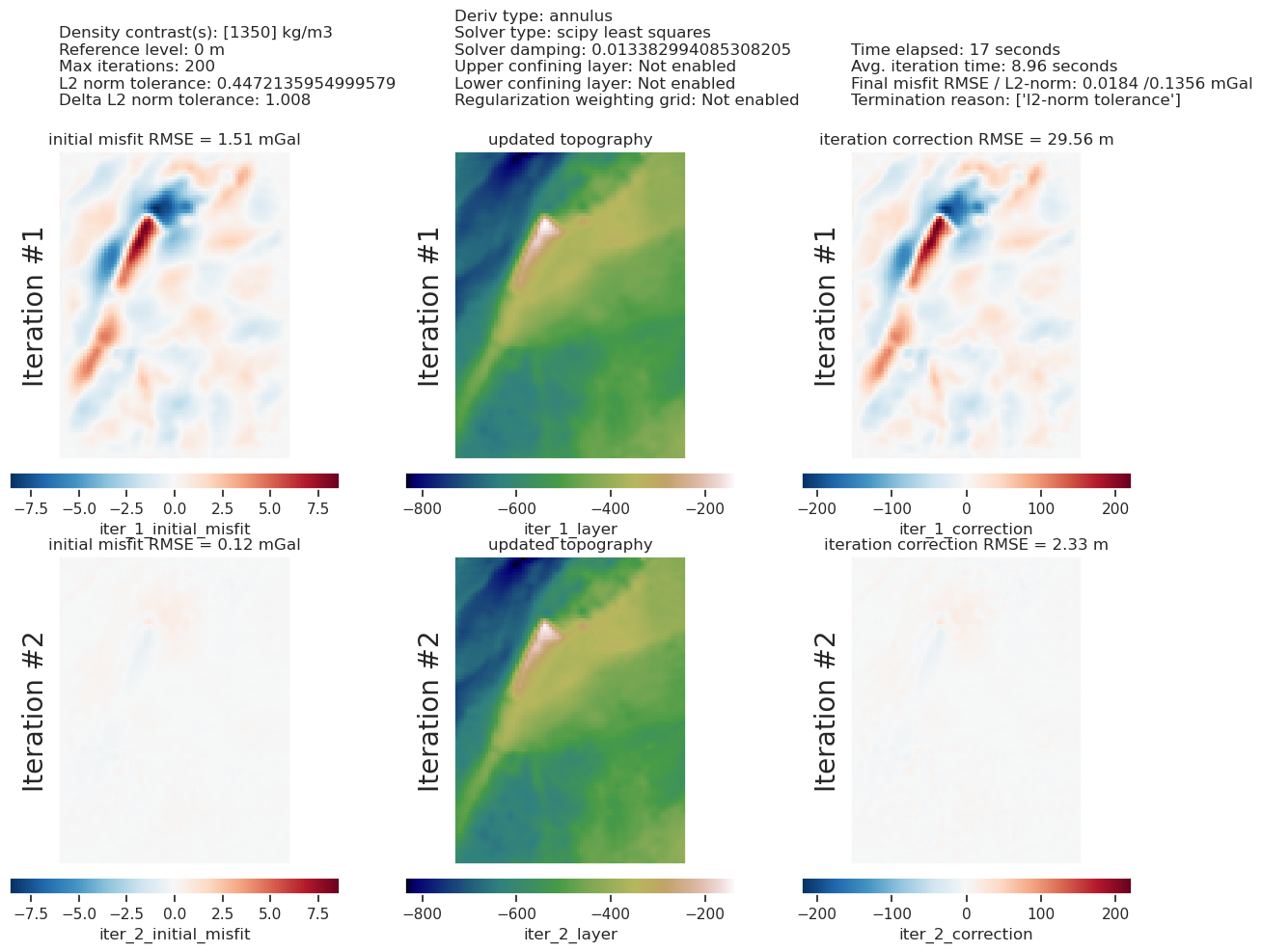

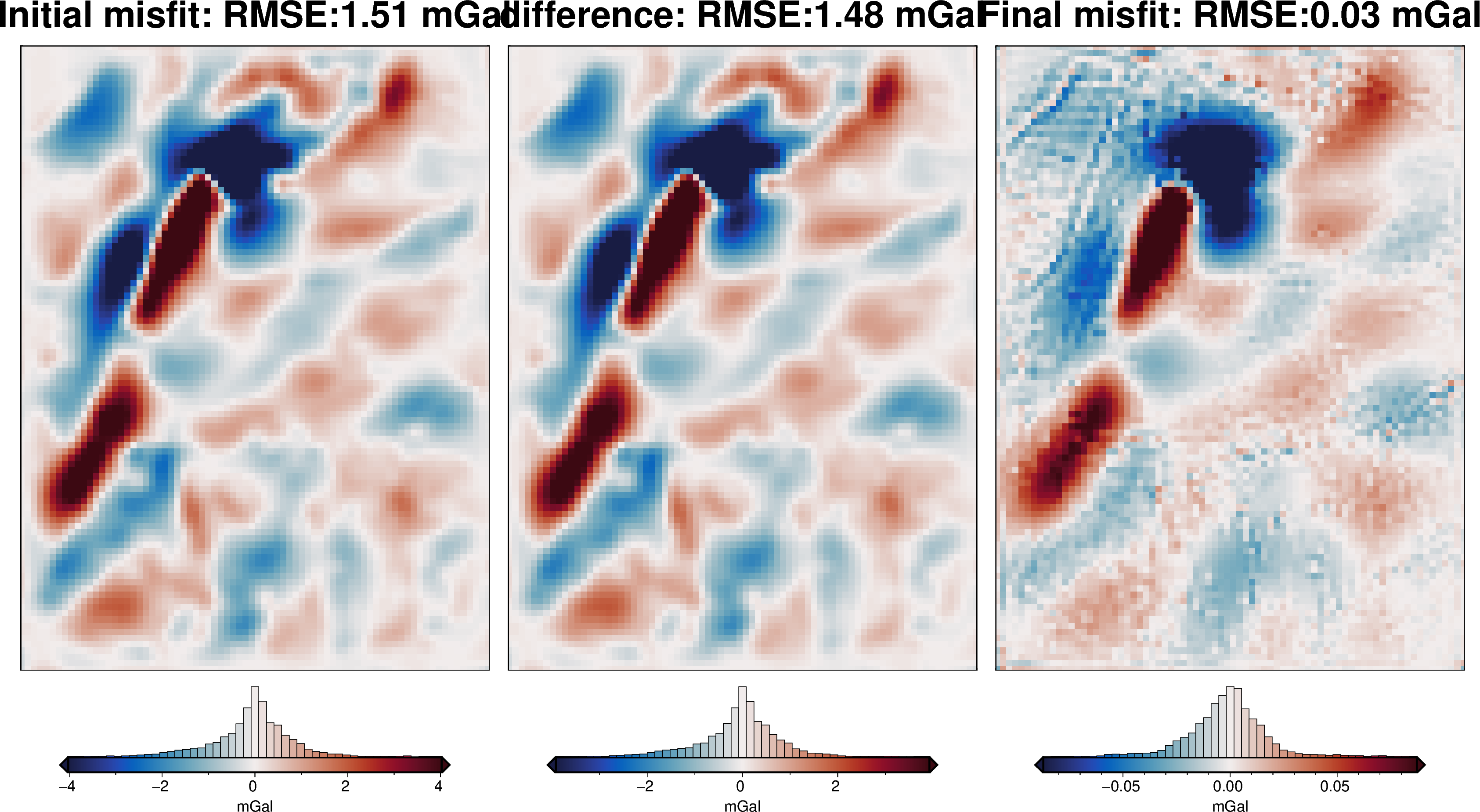

plotting.plot_inversion_results(

grav_results,

topo_results,

parameters,

inversion_region,

iters_to_plot=2,

plot_iter_results=True,

plot_topo_results=True,

plot_grav_results=True,

)

final_topography = topo_results.set_index(["northing", "easting"]).to_xarray().topo

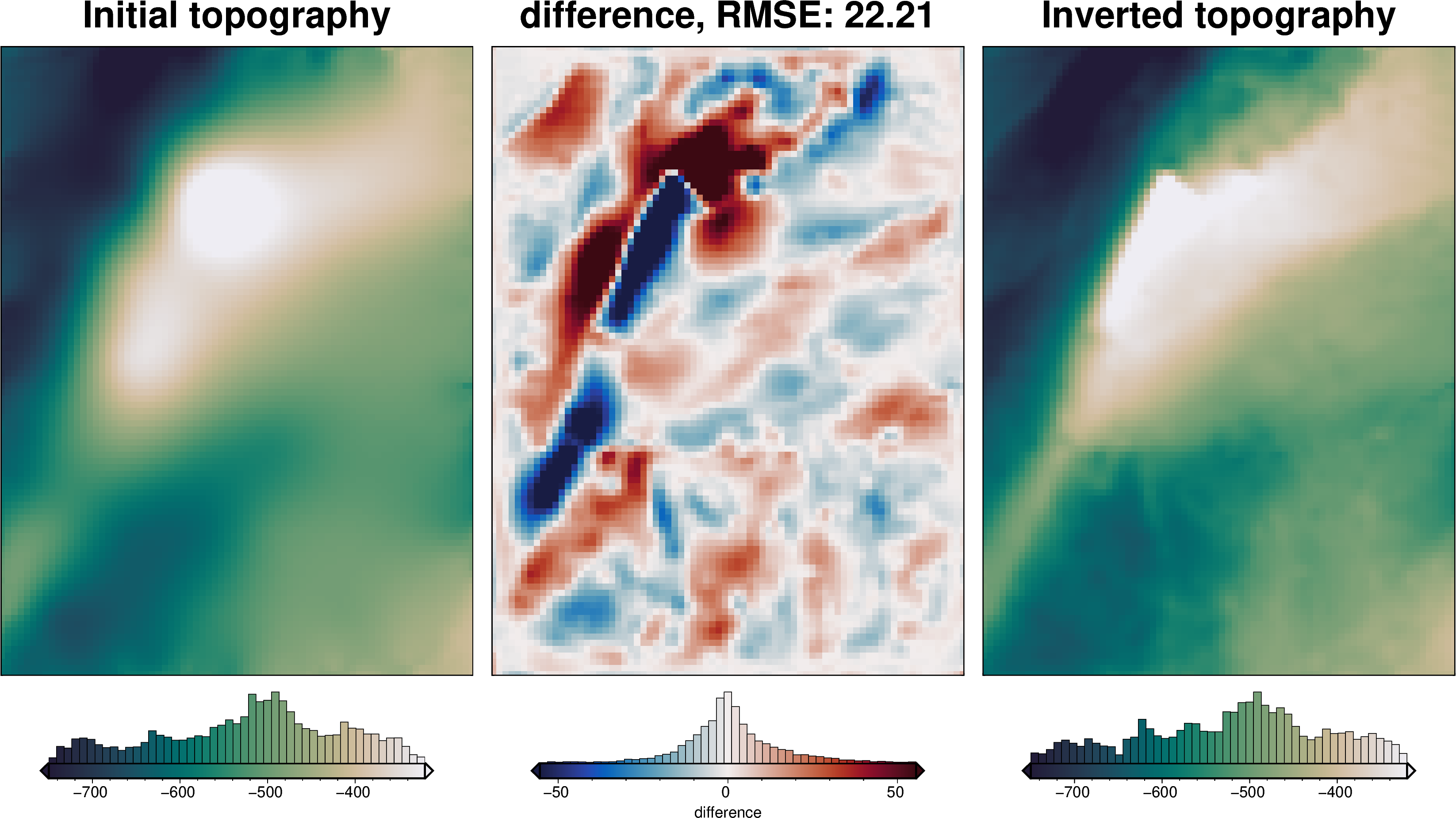

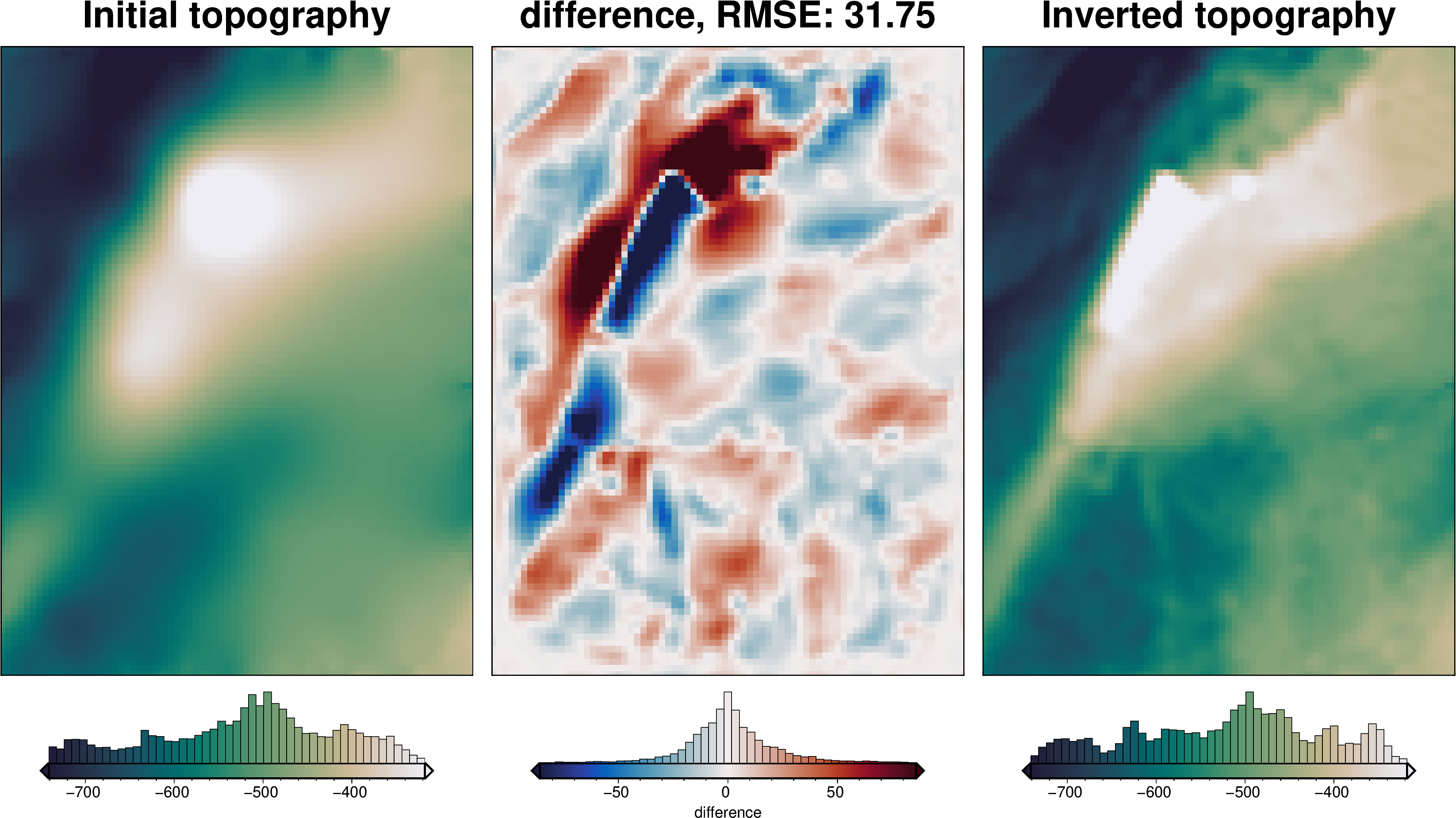

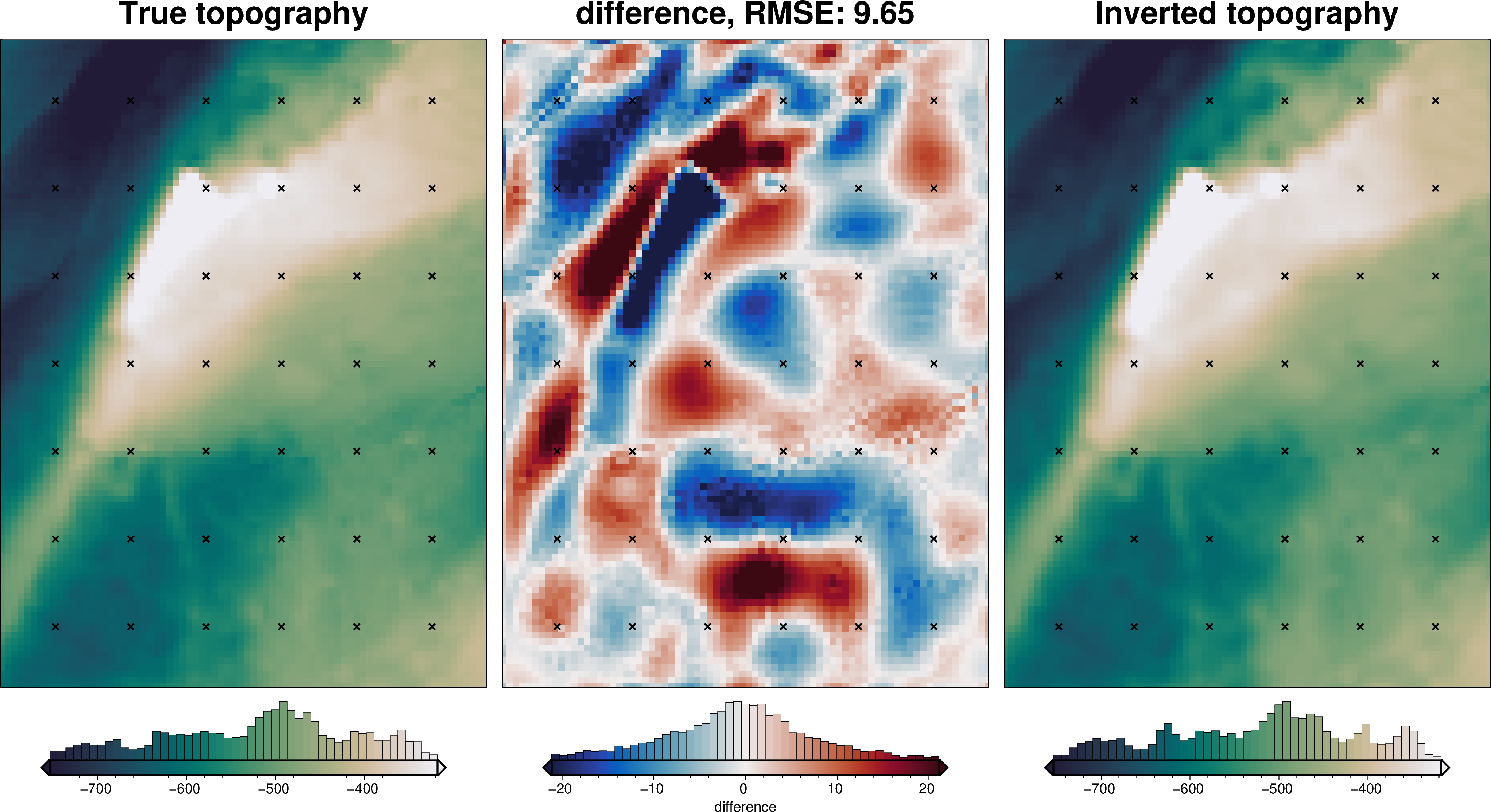

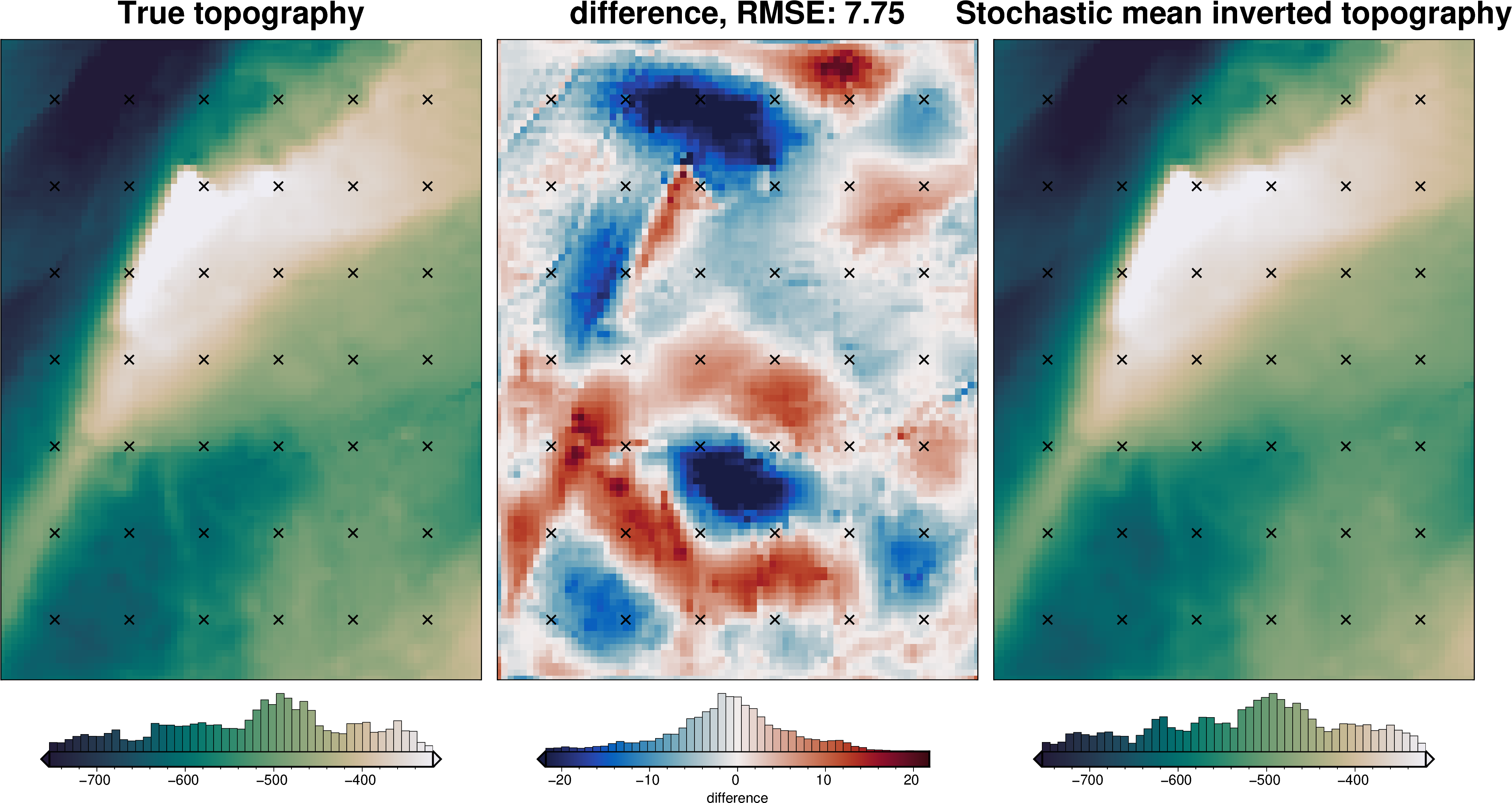

_ = polar_utils.grd_compare(

bathymetry,

final_topography,

region=inversion_region,

plot=True,

grid1_name="True topography",

grid2_name="Inverted topography",

robust=True,

hist=True,

inset=False,

verbose="q",

title="difference",

grounding_line=False,

reverse_cpt=True,

cmap="rain",

points=constraint_points[constraint_points.inside],

points_style="x.2c",

)

[26]:

# sample the inverted topography at the constraint points

constraint_points = utils.sample_grids(

constraint_points,

final_topography,

"inverted_topography",

coord_names=("easting", "northing"),

)

rmse = utils.rmse(constraint_points.upward - constraint_points.inverted_topography)

print(f"RMSE: {rmse:.2f} m")

RMSE: 5.35 m

Density CV¶

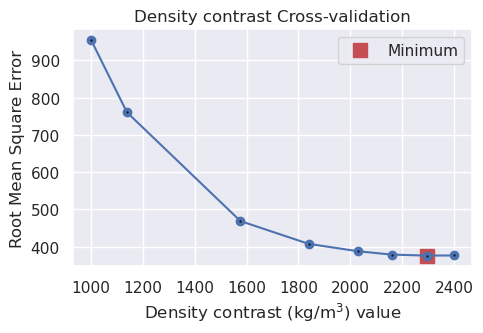

[49]:

# run the cross validation for density

study, inversion_results = optimization.optimize_inversion_zref_density_contrast(

grav_df=grav_df,

constraints_df=constraint_points,

density_contrast_limits=(1000, 2400),

zref=0,

n_trials=8,

starting_topography=starting_bathymetry,

regional_grav_kwargs=dict(

method="constant",

constant=0,

),

fname=f"{fpath}_density_cv",

**kwargs,

)

'starting_gravity' already a column of `grav_df`, but is being overwritten since calculate_starting_gravity is True

'reg' already a column of `grav_df`, but is being overwritten since calculate_regional_misfit is True

[50]:

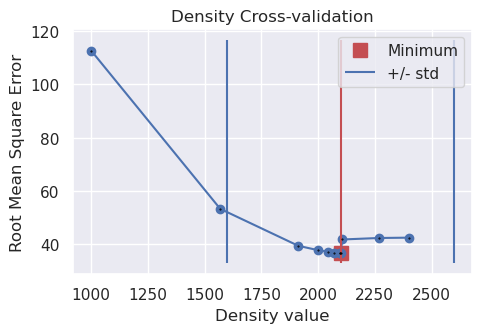

# run a 5-fold cross validation for 10 parameter sets of density

# this performs 50 regional separations and 50 inversions

study, inversion_results = optimization.optimize_inversion_zref_density_contrast_kfolds(

grav_df=grav_df,

constraints_df=constraint_points[constraint_points.inside],

density_contrast_limits=(1000, 2400),

zref=0,

n_trials=12,

split_kwargs=dict(

n_splits=4,

method="KFold",

),

seed=2,

regional_grav_kwargs=regional_grav_kwargs,

starting_topography_kwargs=starting_topography_kwargs,

fname=f"{fpath}_density_cv_kfolds",

fold_progressbar=False,

**kwargs,

)

'starting_gravity' already a column of `grav_df`, but is being overwritten since calculate_starting_gravity is True

'reg' already a column of `grav_df`, but is being overwritten since calculate_regional_misfit is True

[27]:

# load study from normal optimization

with pathlib.Path(f"{fpath}_density_cv_study.pickle").open("rb") as f:

study = pickle.load(f)

optimized_density = study.best_params["density_contrast"]

# load study from kfold optimization

with pathlib.Path(f"{fpath}_density_cv_kfolds_study.pickle").open("rb") as f:

study = pickle.load(f)

kfold_optimized_density = study.best_params["density_contrast"]

print("optimal density contrast from normal optimization: ", optimized_density)

print("optimal density contrast from K-folds optimization: ", kfold_optimized_density)

print("real density contrast", true_density_contrast)

best_density_contrast = min(

[optimized_density, kfold_optimized_density],

key=lambda x: abs(x - true_density_contrast),

)

optimal density contrast from normal optimization: 2294

optimal density contrast from K-folds optimization: 2099

real density contrast 1476

[28]:

print("optimal determined density contrast", best_density_contrast)

print("real density contrast", true_density_contrast)

print("density error", best_density_contrast - true_density_contrast)

optimal determined density contrast 2099

real density contrast 1476

density error 623

Redo with optimal density contrast¶

During the density cross-validation to avoid biasing the scores, we had to manually set a regional field. Now, with the optimal density contrast value found, we can rerun the inversion with an automatically determined regional field strength (the average value misfit at the constraints).

[29]:

density_contrast = best_density_contrast

density_grid = xr.where(

starting_bathymetry >= zref,

density_contrast,

-density_contrast,

)

# create layer of prisms

starting_prisms = utils.grids_to_prisms(

starting_bathymetry,

zref,

density=density_grid,

)

grav_df["starting_gravity"] = starting_prisms.prism_layer.gravity(

coordinates=(

grav_df.easting,

grav_df.northing,

grav_df.upward,

),

field="g_z",

progressbar=True,

)

grav_df = regional.regional_separation(

grav_df=grav_df,

**regional_grav_kwargs,

)

regional_comparison(grav_df)

[30]:

grav_grid = grav_df.set_index(["northing", "easting"]).to_xarray()

fig = maps.plot_grd(

grav_grid.gravity_anomaly,

region=inversion_region,

fig_height=10,

title="Partial Topo-free disturbance",

cmap="balance+h0",

hist=True,

cbar_label="mGal",

frame=["nSWe", "xaf10000", "yaf10000"],

)

fig = maps.plot_grd(

grav_grid.misfit,

region=inversion_region,

fig=fig,

origin_shift="xshift",

fig_height=10,

title="Misfit",

cmap="balance+h0",

hist=True,

cbar_label="mGal",

frame=["nSwE", "xaf10000", "yaf10000"],

)

fig = maps.plot_grd(

grav_grid.reg,

region=inversion_region,

fig=fig,

origin_shift="xshift",

fig_height=10,

title="Regional misfit",

cmap="balance+h0",

hist=True,

cbar_label="mGal",

frame=["nSwE", "xaf10000", "yaf10000"],

)

fig = maps.plot_grd(

grav_grid.res,

region=inversion_region,

fig=fig,

origin_shift="xshift",

fig_height=10,

title="Residual misfit",

cmap="balance+h0",

cpt_lims=[-vd.maxabs(grav_grid.res), vd.maxabs(grav_grid.res)],

hist=True,

cbar_label="mGal",

frame=["nSwE", "xaf10000", "yaf10000"],

points=constraint_points[constraint_points.inside],

points_style="x.2c",

)

fig.show()

[31]:

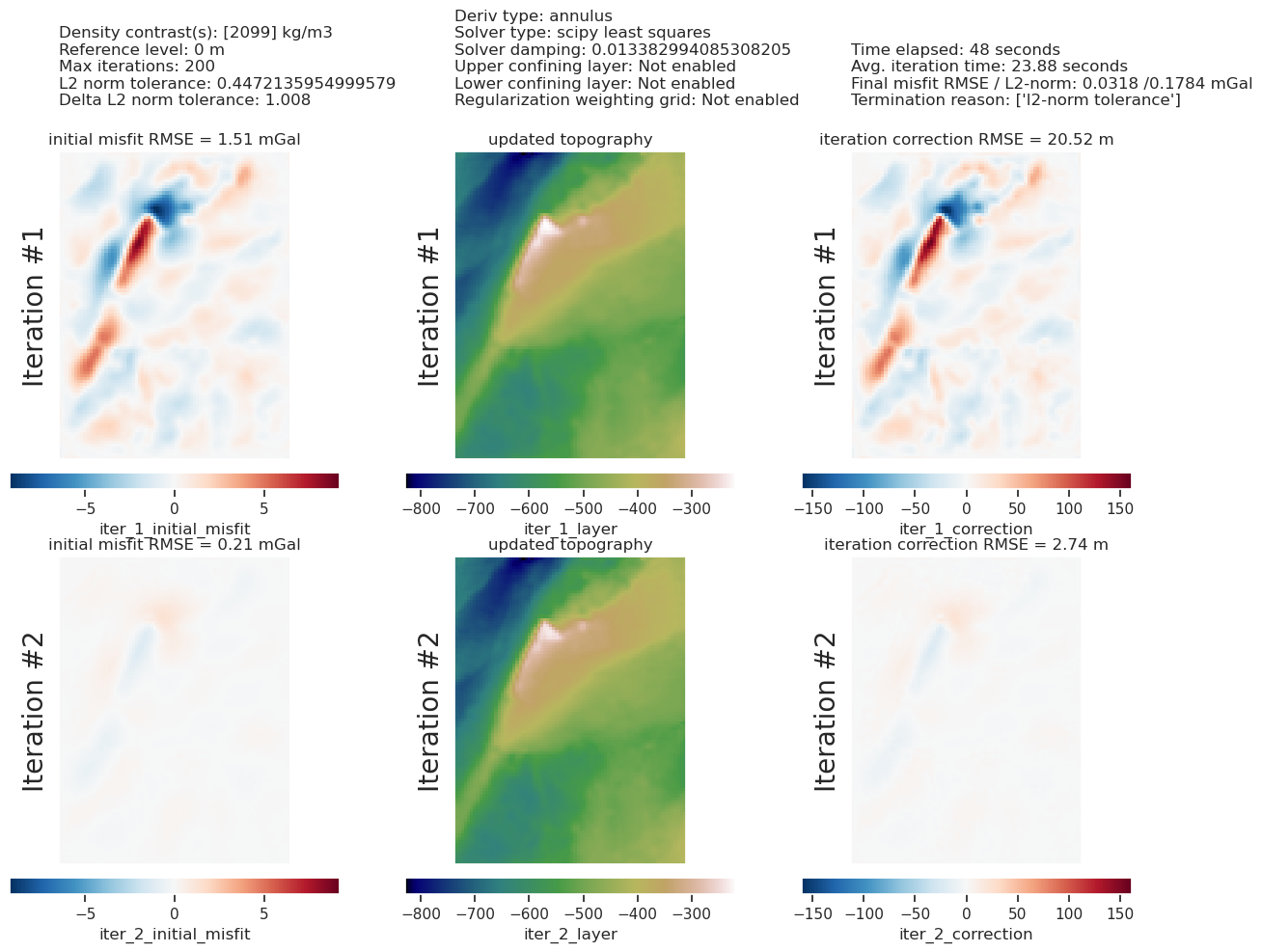

# run the inversion workflow

inversion_results = inversion.run_inversion_workflow(

grav_df=grav_df,

fname=f"{fpath}_optimal",

starting_prisms=starting_prisms,

plot_dynamic_convergence=True,

**kwargs,

)

[32]:

# load saved inversion results

with pathlib.Path(f"{fpath}_optimal_results.pickle").open("rb") as f:

results = pickle.load(f)

# collect the results

topo_results, grav_results, parameters, elapsed_time = results

final_topography = topo_results.set_index(["northing", "easting"]).to_xarray().topo

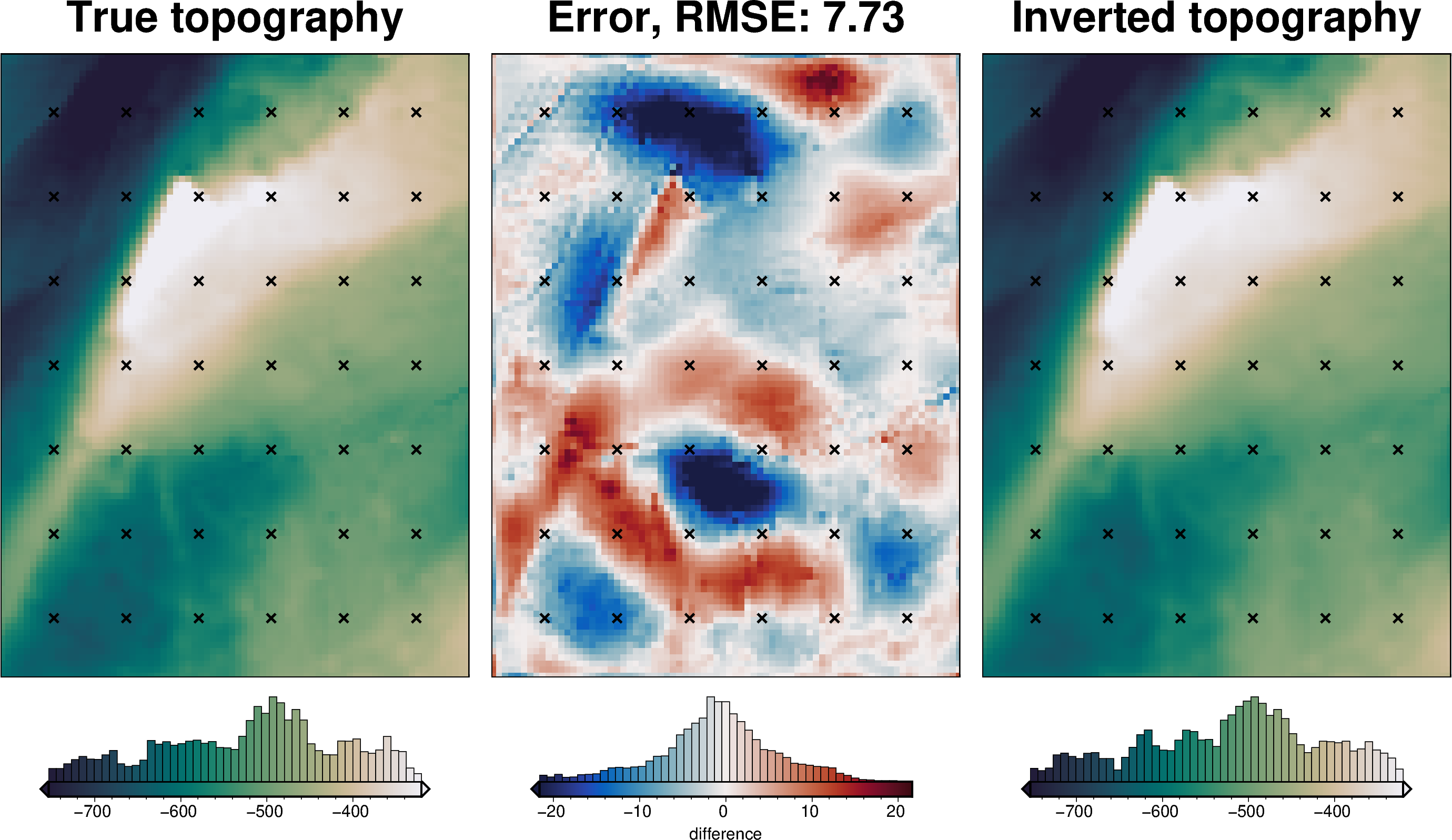

[33]:

_ = polar_utils.grd_compare(

bathymetry,

final_topography,

fig_height=10,

region=inversion_region,

plot=True,

grid1_name="True topography",

grid2_name="Inverted topography",

robust=True,

hist=True,

inset=False,

verbose="q",

title="Error",

grounding_line=False,

reverse_cpt=True,

cmap="rain",

points=constraint_points[constraint_points.inside],

points_style="x.2c",

)

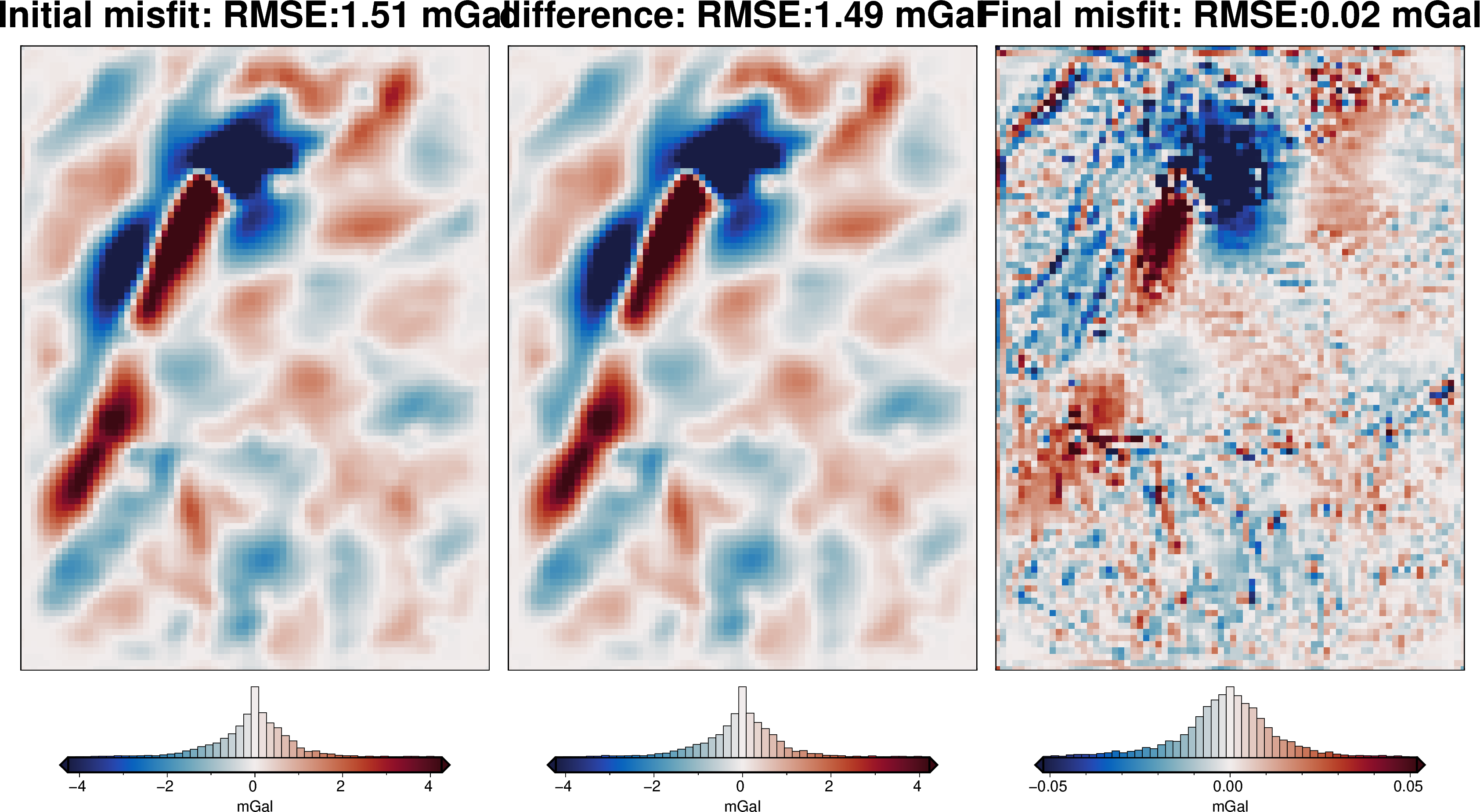

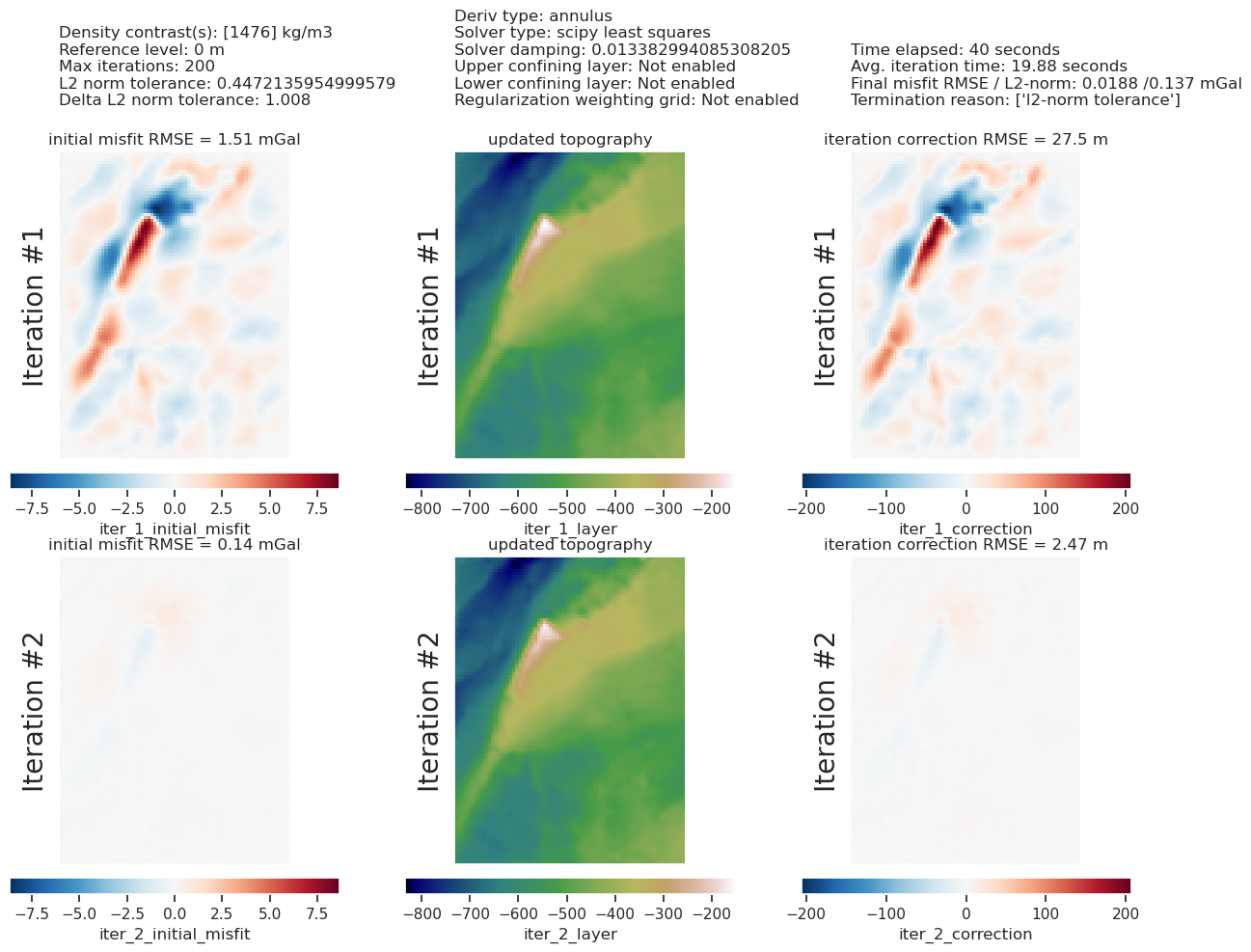

[34]:

plotting.plot_inversion_results(

grav_results,

topo_results,

parameters,

inversion_region,

iters_to_plot=2,

plot_iter_results=True,

plot_topo_results=True,

plot_grav_results=True,

)

[35]:

# sample the inverted topography at the constraint points

constraint_points = utils.sample_grids(

constraint_points,

final_topography,

"inverted_topography",

coord_names=("easting", "northing"),

)

rmse = utils.rmse(constraint_points.upward - constraint_points.inverted_topography)

print(f"RMSE: {rmse:.2f} m")

RMSE: 3.54 m

[36]:

# save to csv

constraint_points.to_csv(f"{fpath}_constraint_points.csv", index=False)

[37]:

constraint_points = pd.read_csv(f"{fpath}_constraint_points.csv")

constraint_points

[37]:

| northing | easting | inside | true_upward | upward | starting_bathymetry | dif | inverted_topography | |

|---|---|---|---|---|---|---|---|---|

| 0 | -1.600000e+06 | -4.000000e+04 | False | -601.093994 | -601.093994 | -601.093994 | 0.000000 | -611.293152 |

| 1 | -1.600000e+06 | -3.800000e+04 | False | -609.216919 | -609.216919 | -609.216919 | 0.000000 | -607.129700 |

| 2 | -1.600000e+06 | -3.600000e+04 | False | -616.355957 | -616.355957 | -616.355957 | 0.000000 | -609.253174 |

| 3 | -1.600000e+06 | -3.400000e+04 | False | -621.262268 | -621.262268 | -621.262268 | 0.000000 | -610.899475 |

| 4 | -1.600000e+06 | -3.200000e+04 | False | -625.510925 | -625.510925 | -625.510925 | 0.000000 | -614.510315 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 881 | -1.418571e+06 | -3.637979e-12 | True | -747.305711 | -747.305711 | -747.289192 | -0.016520 | -733.876385 |

| 882 | -1.418571e+06 | 2.333333e+04 | True | -619.672055 | -619.672055 | -619.459742 | -0.212313 | -597.901453 |

| 883 | -1.418571e+06 | 4.666667e+04 | True | -505.761536 | -505.761536 | -505.739808 | -0.021728 | -497.452503 |

| 884 | -1.418571e+06 | 7.000000e+04 | True | -447.753091 | -447.753091 | -447.782831 | 0.029740 | -456.295524 |

| 885 | -1.418571e+06 | 9.333333e+04 | True | -395.004206 | -395.004206 | -395.079663 | 0.075456 | -387.897039 |

886 rows × 8 columns

Redo regional separation with true density¶

[38]:

density_grid = xr.where(

starting_bathymetry >= zref,

true_density_contrast,

-true_density_contrast,

)

# create layer of prisms

starting_prisms_true = utils.grids_to_prisms(

starting_bathymetry,

zref,

density=density_grid,

)

grav_df_true_density = grav_df.copy()

grav_df_true_density["starting_gravity"] = starting_prisms_true.prism_layer.gravity(

coordinates=(

grav_df_true_density.easting,

grav_df_true_density.northing,

grav_df_true_density.upward,

),

field="g_z",

progressbar=True,

)

# temporarily set some kwargs

true_density_reg_kwargs = copy.deepcopy(regional_grav_kwargs)

true_density_reg_kwargs["cv_kwargs"]["fname"] = (

"../tmp_outputs/Ross_Sea_04_regional_sep_true_density"

)

true_density_reg_kwargs["cv_kwargs"]["progressbar"] = True

grav_df_true_density = regional.regional_separation(

grav_df=grav_df_true_density,

**true_density_reg_kwargs,

)

regional_comparison(grav_df_true_density)

[39]:

# run the inversion workflow

inversion_results = inversion.run_inversion_workflow(

grav_df=grav_df_true_density,

fname=f"{fpath}_true_density",

starting_prisms=starting_prisms_true,

plot_dynamic_convergence=True,

**kwargs,

)

[40]:

# load saved inversion results

with pathlib.Path(f"{fpath}_true_density_results.pickle").open("rb") as f:

inversion_results = pickle.load(f)

# collect the results

topo_results, grav_results, parameters, elapsed_time = inversion_results

final_topography = topo_results.set_index(["northing", "easting"]).to_xarray().topo

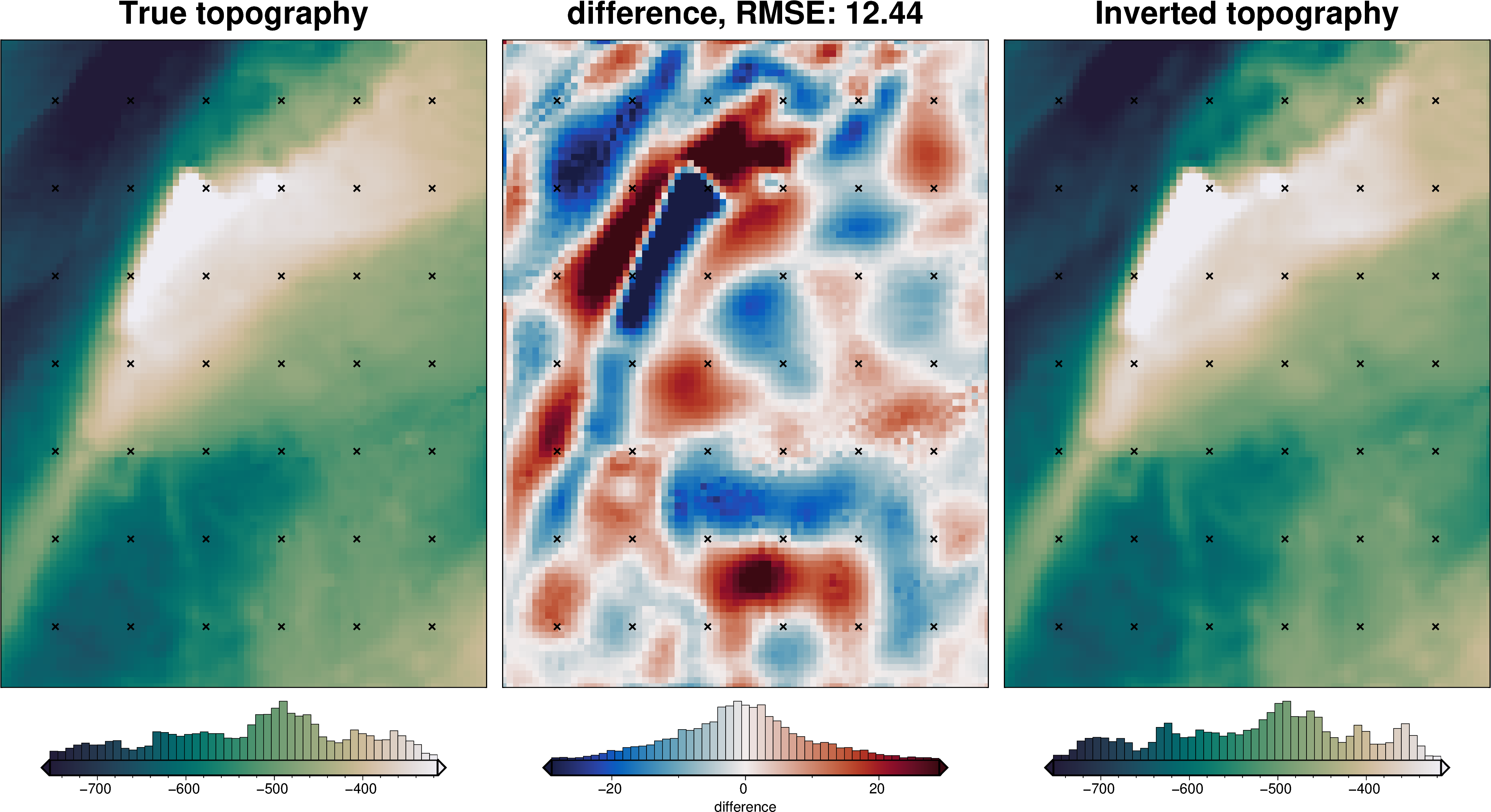

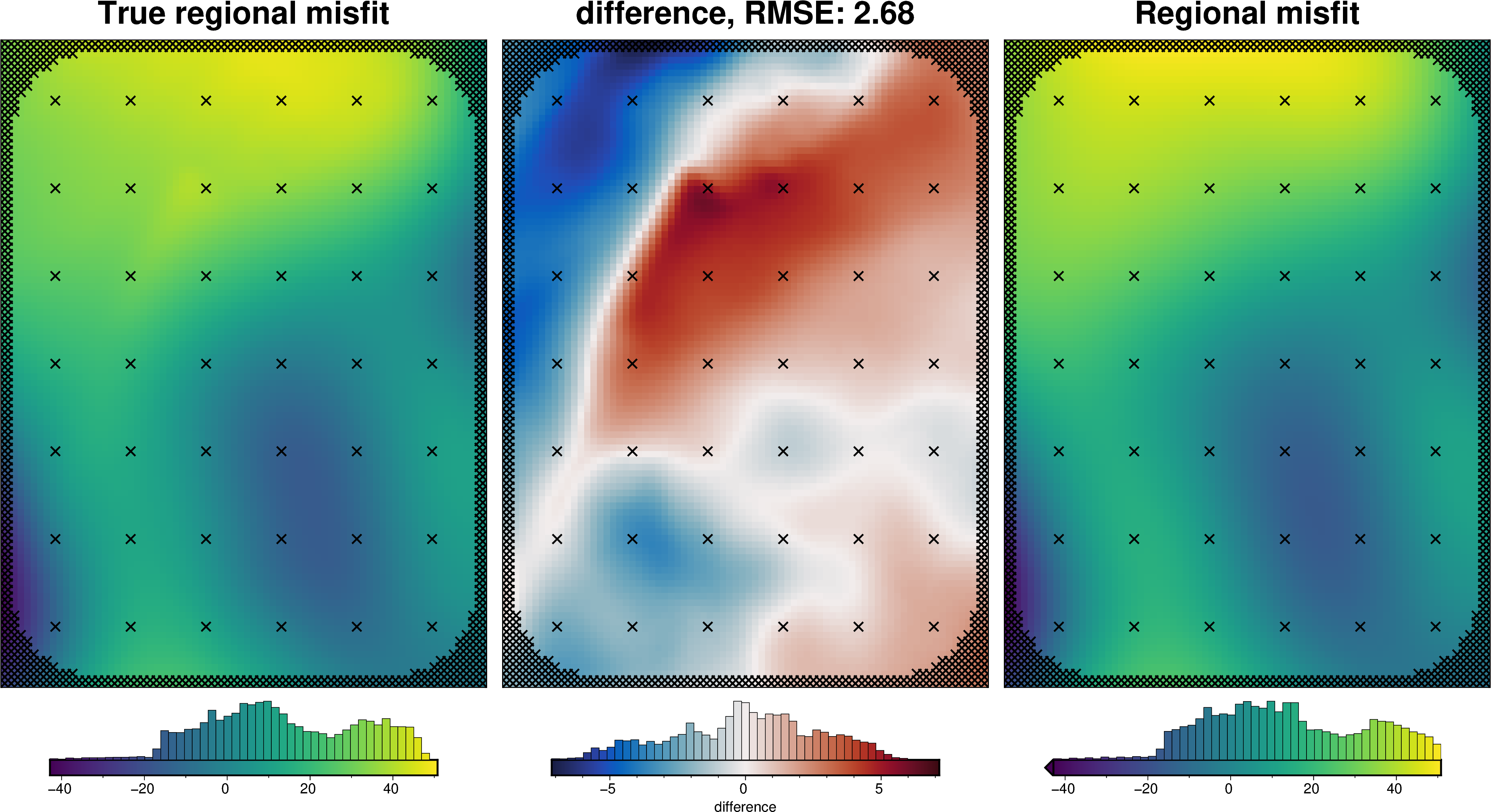

[41]:

plotting.plot_inversion_results(

grav_results,

topo_results,

parameters,

inversion_region,

iters_to_plot=2,

plot_iter_results=True,

plot_topo_results=True,

plot_grav_results=True,

)

_ = polar_utils.grd_compare(

bathymetry,

final_topography,

region=inversion_region,

plot=True,

grid1_name="True topography",

grid2_name="Inverted topography",

robust=True,

hist=True,

inset=False,

verbose="q",

title="difference",

grounding_line=False,

reverse_cpt=True,

cmap="rain",

points=constraint_points[constraint_points.inside],

points_style="x.2c",

)

[ ]:

# re-load the study from the saved pickle file

with pathlib.Path(

f"{true_density_reg_kwargs.get('cv_kwargs').get('fname')}.pickle"

).open("rb") as f:

study = pickle.load(f)

reg_eq_depth = min(study.best_trials, key=lambda t: t.values[0]).params["depth"] # noqa: PD011

reg_eq_damping = None

new_true_density_reg_kwargs = dict(

method="constraints",

grid_method="eq_sources",

constraints_df=constraint_points,

damping=reg_eq_damping,

depth=reg_eq_depth,

block_size=None,

)

reg_eq_depth, reg_eq_damping

(214284.78608580318, None)

[43]:

grav_df_true_density

[43]:

| northing | easting | upward | bathymetry_grav | basement_grav_normalized | basement_grav | gravity_anomaly | starting_gravity | true_res | misfit | reg | res | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | -1600000.0 | -40000.0 | 1000.0 | -35.551054 | -0.575610 | -36.298772 | -71.849826 | -35.578561 | -3.009687 | -36.271265 | -36.352514 | 0.081249 |

| 1 | -1600000.0 | -38000.0 | 1000.0 | -36.054657 | -0.523194 | -33.940048 | -69.994704 | -36.042642 | -3.088826 | -33.952063 | -33.935629 | -0.016434 |

| 2 | -1600000.0 | -36000.0 | 1000.0 | -36.473146 | -0.466341 | -31.381660 | -67.854806 | -36.460095 | -3.125498 | -31.394711 | -31.343652 | -0.051059 |

| 3 | -1600000.0 | -34000.0 | 1000.0 | -36.755608 | -0.406001 | -28.666395 | -65.422003 | -36.784649 | -3.111113 | -28.637354 | -28.615142 | -0.022212 |

| 4 | -1600000.0 | -32000.0 | 1000.0 | -36.951029 | -0.343146 | -25.837899 | -62.788928 | -36.998469 | -3.110966 | -25.790460 | -25.788302 | -0.002158 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 7671 | -1400000.0 | 102000.0 | 1000.0 | -25.760090 | 0.399165 | 7.566093 | -18.193997 | -25.498180 | -2.438584 | 7.304183 | 7.321185 | -0.017002 |

| 7672 | -1400000.0 | 104000.0 | 1000.0 | -25.911429 | 0.334795 | 4.669468 | -21.241961 | -25.673780 | -2.429314 | 4.431819 | 4.418937 | 0.012882 |

| 7673 | -1400000.0 | 106000.0 | 1000.0 | -26.032814 | 0.268739 | 1.696911 | -24.335902 | -25.808792 | -2.427211 | 1.472890 | 1.429781 | 0.043108 |

| 7674 | -1400000.0 | 108000.0 | 1000.0 | -26.121903 | 0.201713 | -1.319231 | -27.441134 | -25.904533 | -2.428732 | -1.536601 | -1.582007 | 0.045406 |

| 7675 | -1400000.0 | 110000.0 | 1000.0 | -26.206160 | 0.134416 | -4.347629 | -30.553790 | -25.981733 | -2.442380 | -4.572057 | -4.536543 | -0.035514 |

7676 rows × 12 columns

[44]:

regional_misfit_parameter_dict = {

"depth": {

"distribution": "normal",

"loc": reg_eq_depth, # mean

"scale": reg_eq_depth / 4, # standard deviation

},

}

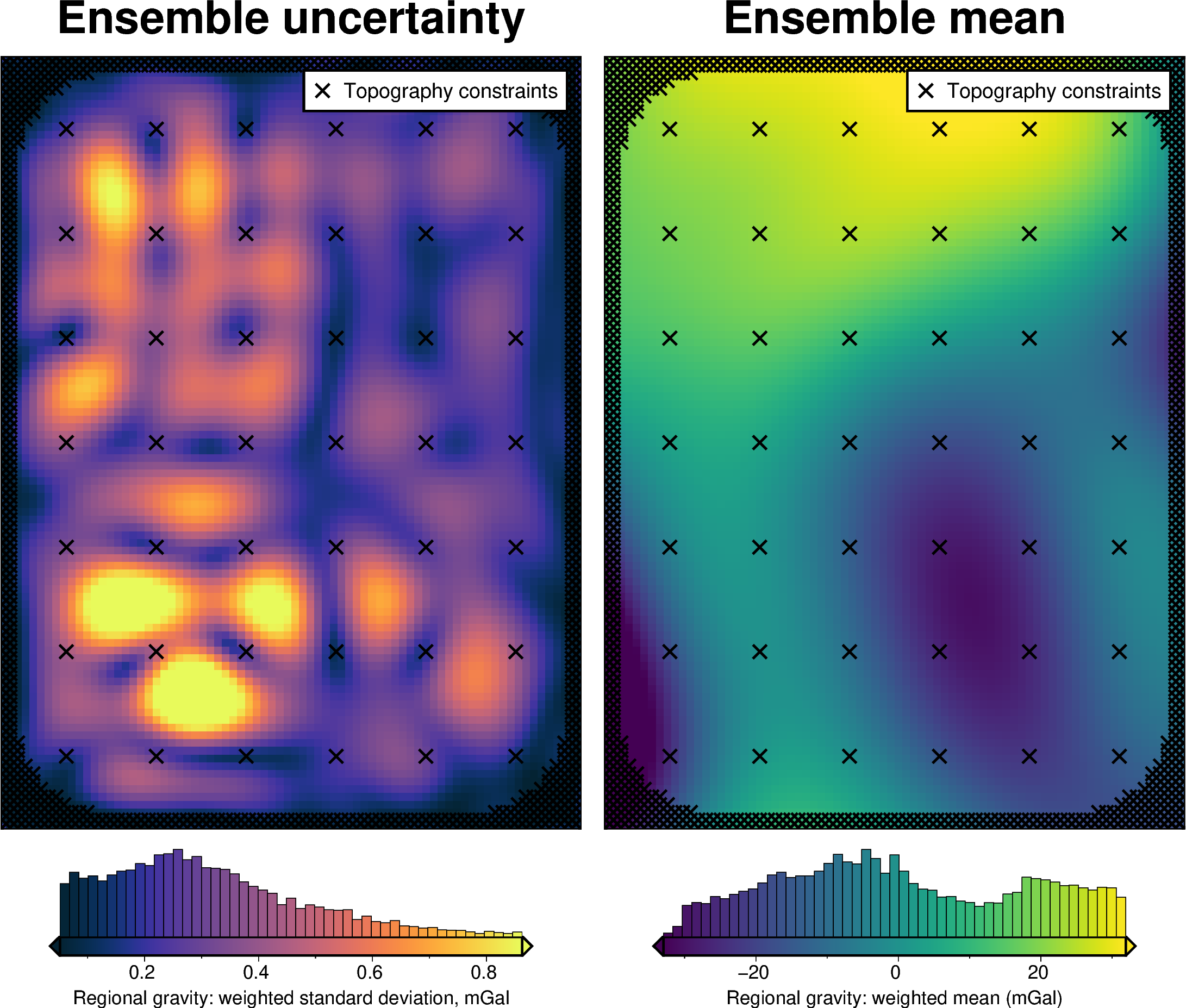

regional_misfit_stats, _ = uncertainty.regional_misfit_uncertainty(

runs=20,

parameter_dict=regional_misfit_parameter_dict,

plot_region=inversion_region,

true_regional=grav_df_true_density.set_index(["northing", "easting"])

.to_xarray()

.basement_grav,

weight_by="constraints",

# weight_by=None,

grav_df=grav_df_true_density,

**new_true_density_reg_kwargs,

)

[45]:

grav_grid = grav_df_true_density.set_index(["northing", "easting"]).to_xarray()

grav_grid["reg_uncert"] = regional_misfit_stats.z_stdev

grav_df_true_density = vd.grid_to_table(grav_grid)

grav_df_true_density

[45]:

| northing | easting | upward | bathymetry_grav | basement_grav_normalized | basement_grav | gravity_anomaly | starting_gravity | true_res | misfit | reg | res | reg_uncert | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | -1600000.0 | -40000.0 | 1000.0 | -35.551054 | -0.575610 | -36.298772 | -71.849826 | -35.578561 | -3.009687 | -36.271265 | -36.352514 | 0.081249 | 0.196374 |

| 1 | -1600000.0 | -38000.0 | 1000.0 | -36.054657 | -0.523194 | -33.940048 | -69.994704 | -36.042642 | -3.088826 | -33.952063 | -33.935629 | -0.016434 | 0.082311 |

| 2 | -1600000.0 | -36000.0 | 1000.0 | -36.473146 | -0.466341 | -31.381660 | -67.854806 | -36.460095 | -3.125498 | -31.394711 | -31.343652 | -0.051059 | 0.058318 |

| 3 | -1600000.0 | -34000.0 | 1000.0 | -36.755608 | -0.406001 | -28.666395 | -65.422003 | -36.784649 | -3.111113 | -28.637354 | -28.615142 | -0.022212 | 0.076759 |

| 4 | -1600000.0 | -32000.0 | 1000.0 | -36.951029 | -0.343146 | -25.837899 | -62.788928 | -36.998469 | -3.110966 | -25.790460 | -25.788302 | -0.002158 | 0.084586 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 7671 | -1400000.0 | 102000.0 | 1000.0 | -25.760090 | 0.399165 | 7.566093 | -18.193997 | -25.498180 | -2.438584 | 7.304183 | 7.321185 | -0.017002 | 0.182931 |

| 7672 | -1400000.0 | 104000.0 | 1000.0 | -25.911429 | 0.334795 | 4.669468 | -21.241961 | -25.673780 | -2.429314 | 4.431819 | 4.418937 | 0.012882 | 0.197329 |

| 7673 | -1400000.0 | 106000.0 | 1000.0 | -26.032814 | 0.268739 | 1.696911 | -24.335902 | -25.808792 | -2.427211 | 1.472890 | 1.429781 | 0.043108 | 0.171544 |

| 7674 | -1400000.0 | 108000.0 | 1000.0 | -26.121903 | 0.201713 | -1.319231 | -27.441134 | -25.904533 | -2.428732 | -1.536601 | -1.582007 | 0.045406 | 0.089063 |

| 7675 | -1400000.0 | 110000.0 | 1000.0 | -26.206160 | 0.134416 | -4.347629 | -30.553790 | -25.981733 | -2.442380 | -4.572057 | -4.536543 | -0.035514 | 0.104078 |

7676 rows × 13 columns

[46]:

grav_df_true_density.to_csv(f"{fpath}_grav_df_true_density.csv", index=False)

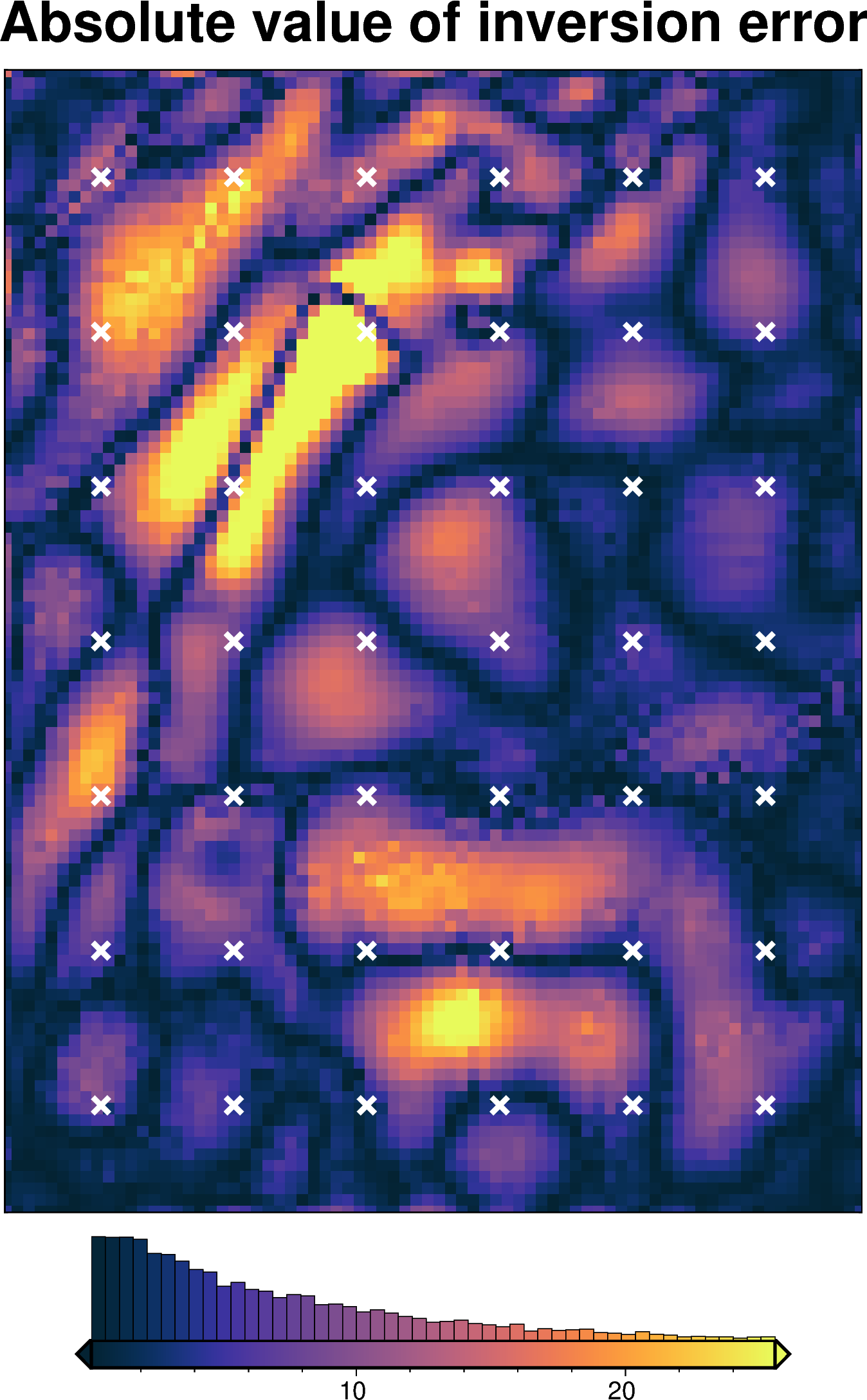

Uncertainty analysis¶

Inversion error¶

[47]:

inversion_error = np.abs(bathymetry - final_topography)

fig = maps.plot_grd(

inversion_error,

region=inversion_region,

hist=True,

cmap="thermal",

title="Absolute value of inversion error",

robust=True,

points=constraint_points[constraint_points.inside],

points_style="x.3c",

points_fill="white",

points_pen="2p",

)

fig.show()

[ ]:

# re-load the study from the saved pickle file

with pathlib.Path(f"{regional_grav_kwargs.get('cv_kwargs').get('fname')}.pickle").open(

"rb"

) as f:

study = pickle.load(f)

reg_eq_depth = min(study.best_trials, key=lambda t: t.values[0]).params["depth"] # noqa: PD011

reg_eq_damping = None

new_regional_grav_kwargs = dict(

method="constraints",

grid_method="eq_sources",

constraints_df=constraint_points,

damping=reg_eq_damping,

depth=reg_eq_depth,

block_size=None,

)

reg_eq_depth, reg_eq_damping

(280813.97890601715, None)

[49]:

# kwargs to reuse for all uncertainty analyses

uncert_kwargs = dict(

grav_df=grav_df,

density_contrast=best_density_contrast,

zref=zref,

starting_prisms=starting_prisms,

starting_topography=starting_bathymetry,

regional_grav_kwargs=new_regional_grav_kwargs,

**kwargs,

)

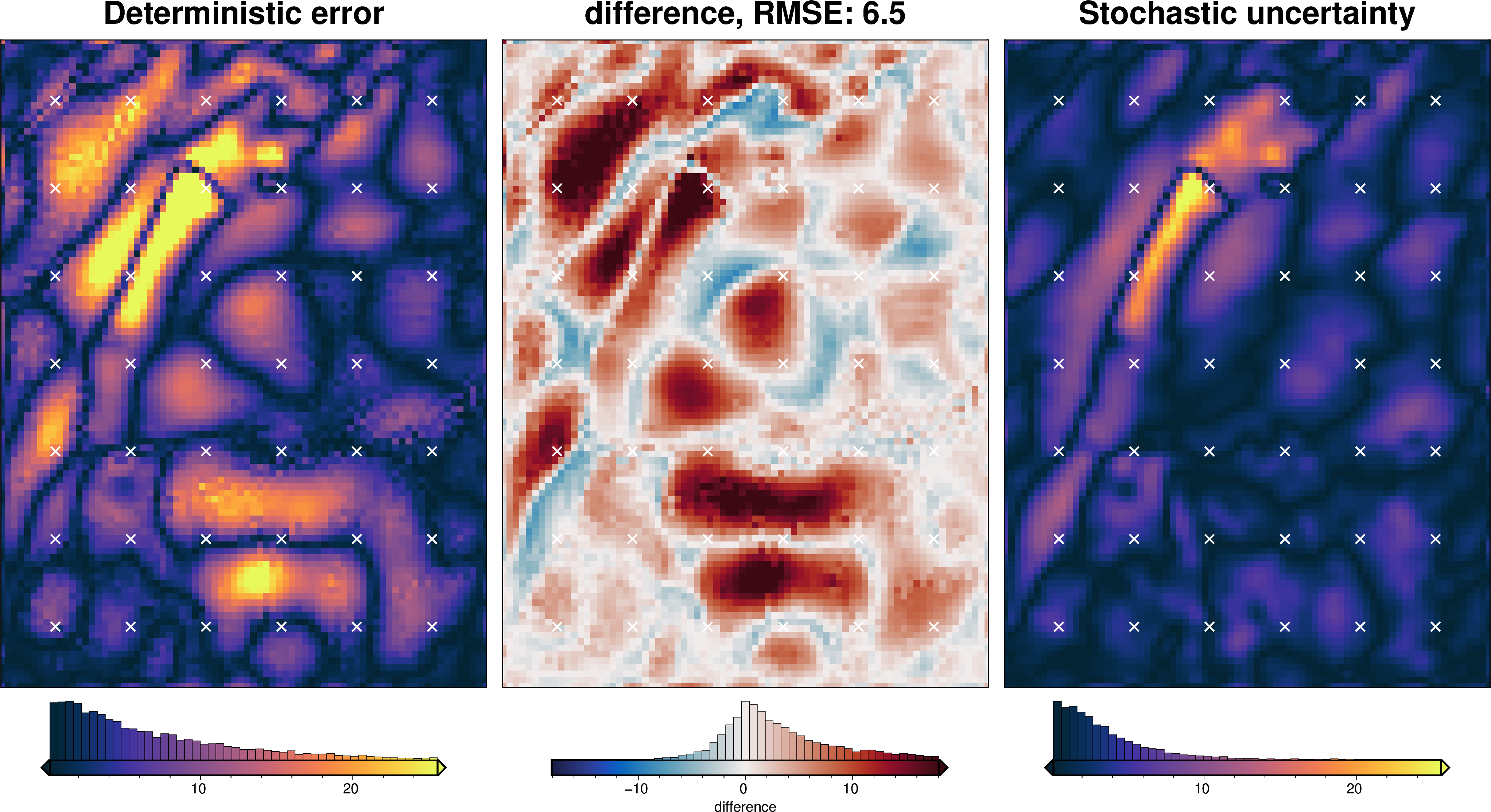

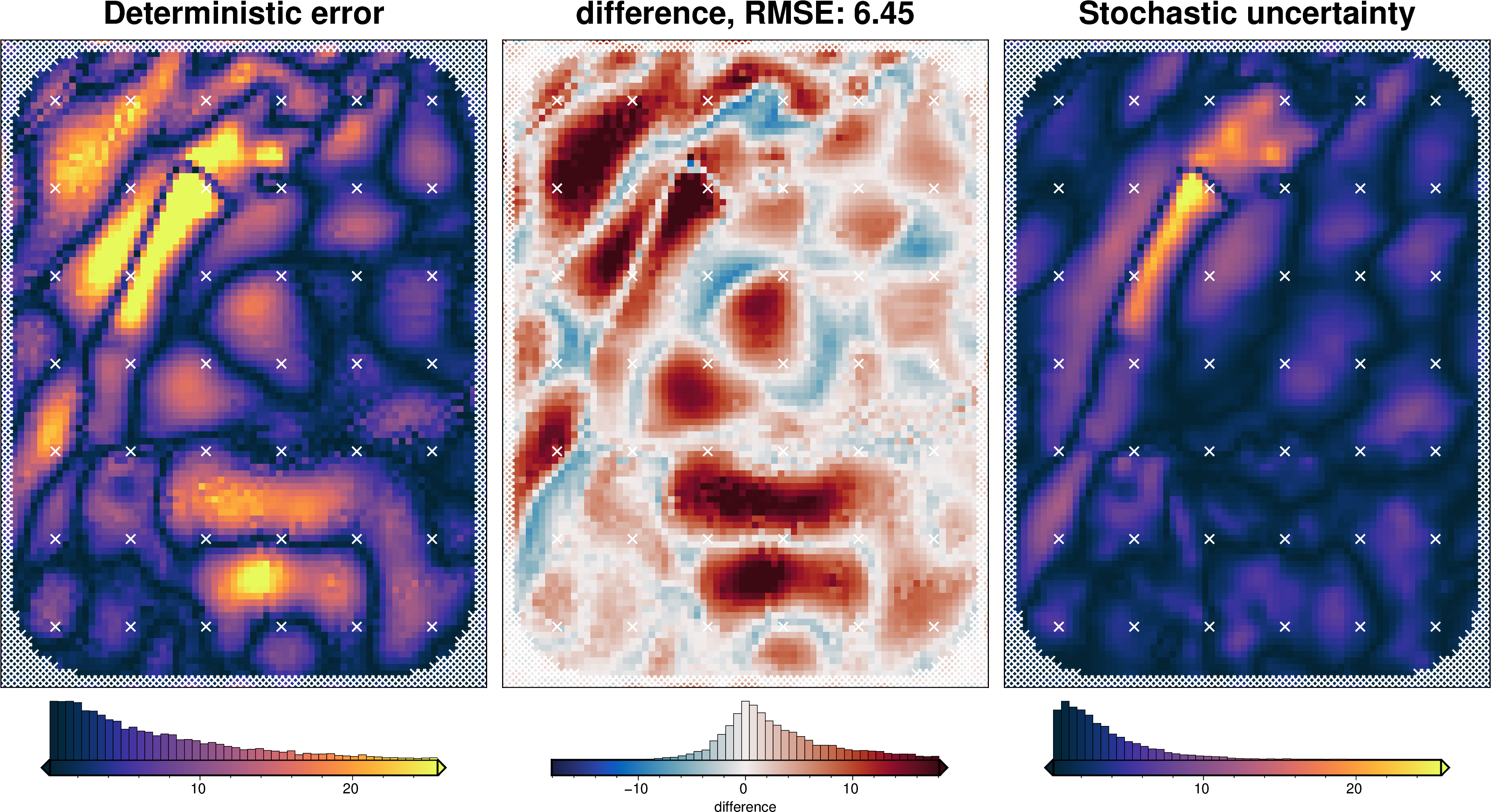

Solver damping component¶

[50]:

# load study

with pathlib.Path(f"{fpath}_damping_cv_damping_cv_study.pickle").open("rb") as f:

study = pickle.load(f)

study_df = study.trials_dataframe().drop(columns=["user_attrs_results"])

study_df = study_df.sort_values("value")

# calculate zscores of values

study_df["value_zscore"] = sp.stats.zscore(study_df["value"])

# drop outliers (values with zscore > |2|)

study_df2 = study_df[(np.abs(study_df.value_zscore) < 2)]

# pick damping standard deviation based on optimization

stdev = np.log10(study_df2.params_damping).std()

print(f"calculated stdev: {stdev}")

stdev = stdev / 2

print(f"using stdev: {stdev}")

calculated stdev: 0.4825312008020997

using stdev: 0.24126560040104986

[51]:

fig = plotting.plot_cv_scores(

study_df.value,

study_df.params_damping,

param_name="Damping",

logx=True,

logy=True,

)

ax = fig.axes[0]

best = float(study_df2.params_damping.iloc[0])

upper = float(10 ** (np.log10(best) + stdev))

lower = float(10 ** (np.log10(best) - stdev))

y_lims = ax.get_ylim()

ax.vlines(best, ymin=y_lims[0], ymax=y_lims[1], color="r")

ax.vlines(upper, ymin=y_lims[0], ymax=y_lims[1], label="+/- std")

ax.vlines(lower, ymin=y_lims[0], ymax=y_lims[1])

x_lims = ax.get_xlim()

ax.set_xlim(

min(x_lims[0], lower),

max(x_lims[1], upper),

)

ax.legend()

print("best:", best, "\nstd:", stdev, "\n+1std:", upper, "\n-1std:", lower)

best: 0.013382994085308205

std: 0.24126560040104986

+1std: 0.023324851444967345

-1std: 0.007678699738344471

[52]:

solver_dict = {

"solver_damping": {

"distribution": "normal",

"loc": np.log10(best_damping), # mean of base 10 exponent

"scale": stdev, # 0.2, # standard deviation of base 10 exponent

"log": True,

},

}

fname = f"{fpath}_uncertainty_damping"

# delete files if already exist

for p in pathlib.Path().glob(f"{fname}*"):

p.unlink(missing_ok=True)

uncert_damping_results = uncertainty.full_workflow_uncertainty_loop(

fname=fname,

runs=10,

parameter_dict=solver_dict,

**uncert_kwargs,

)

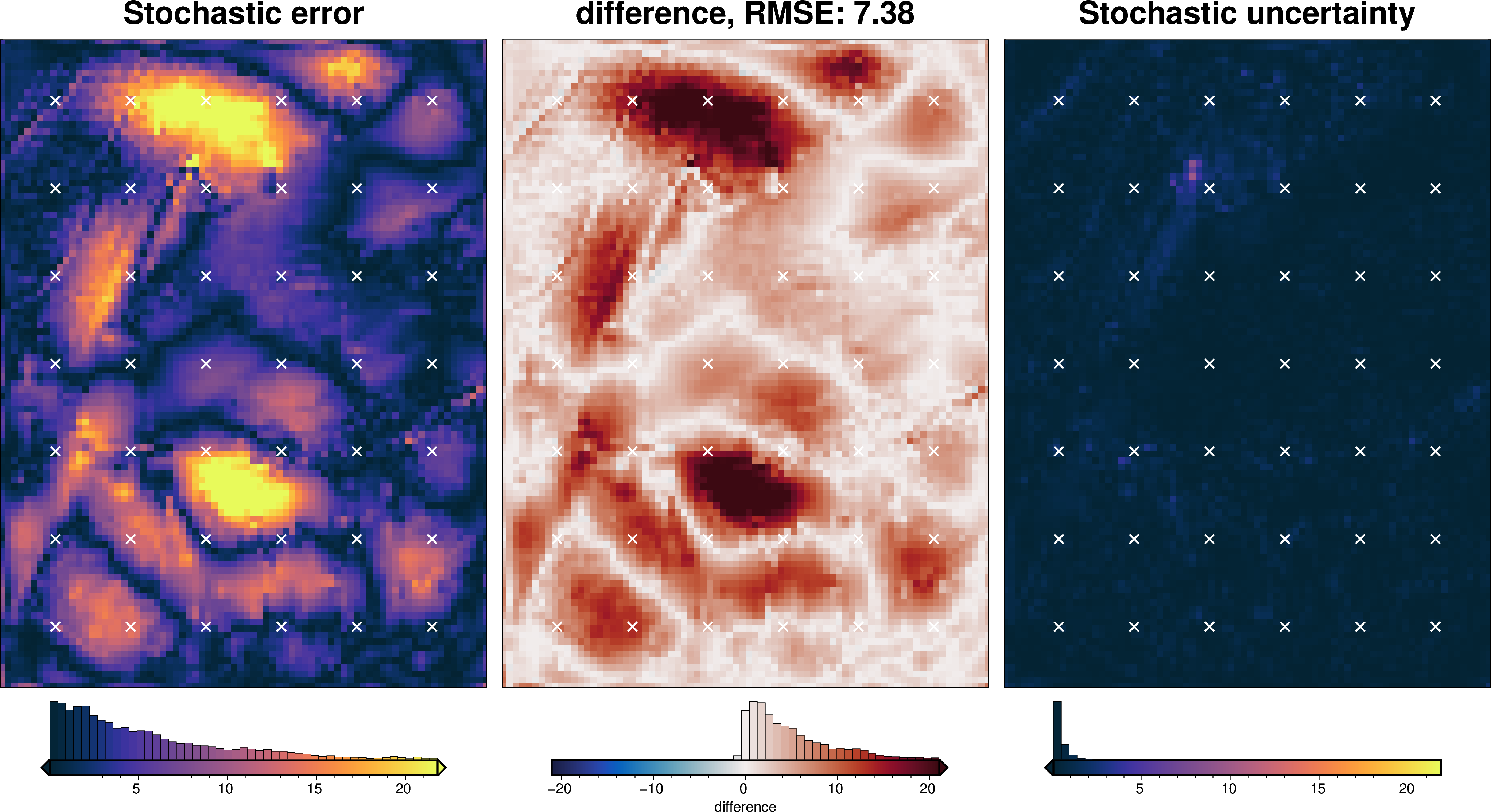

stats_ds = synth_plotting.uncert_plots(

uncert_damping_results,

inversion_region,

bathymetry,

deterministic_bathymetry=final_topography,

constraint_points=constraint_points[constraint_points.inside],

weight_by="constraints",

)

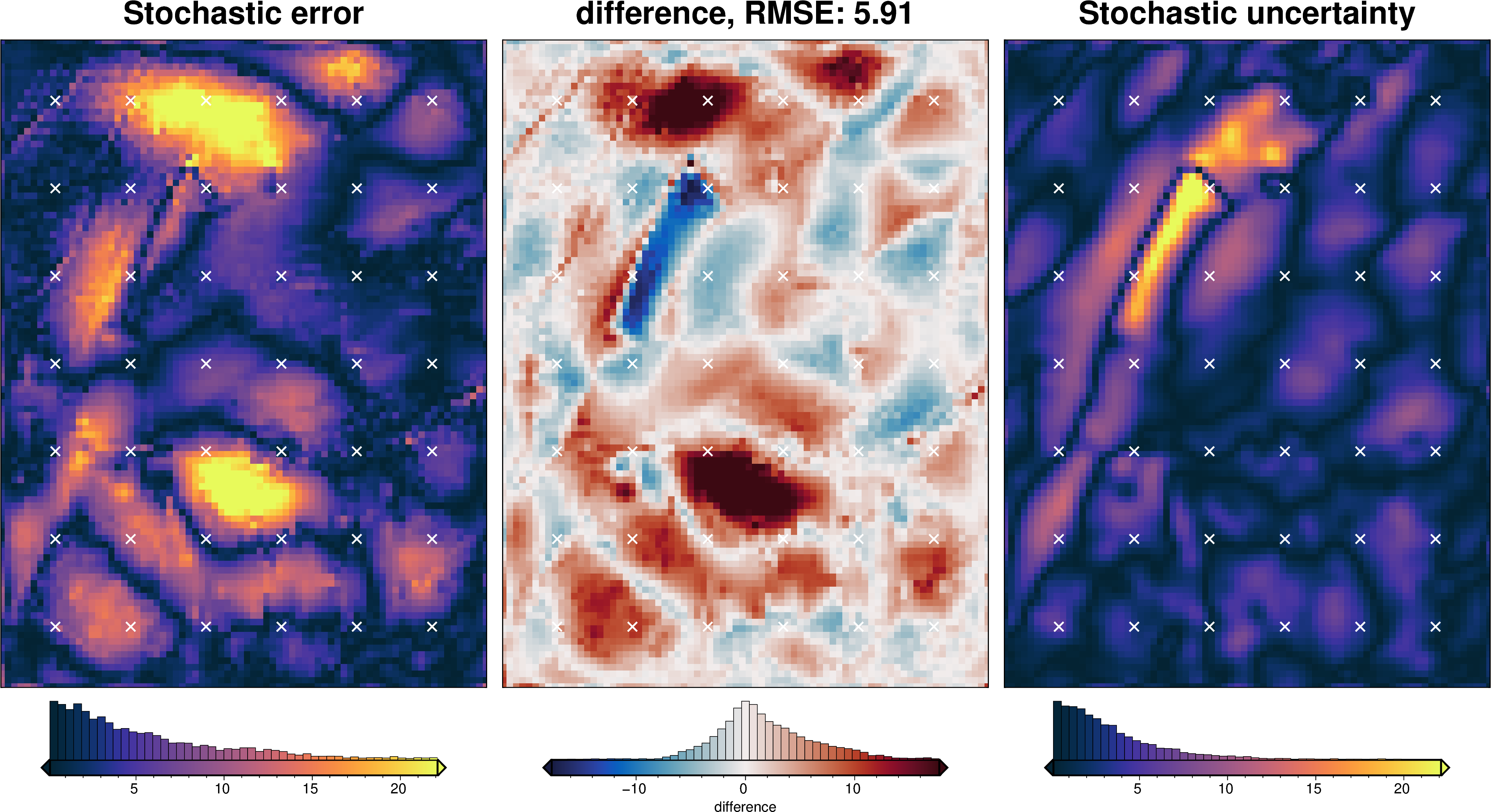

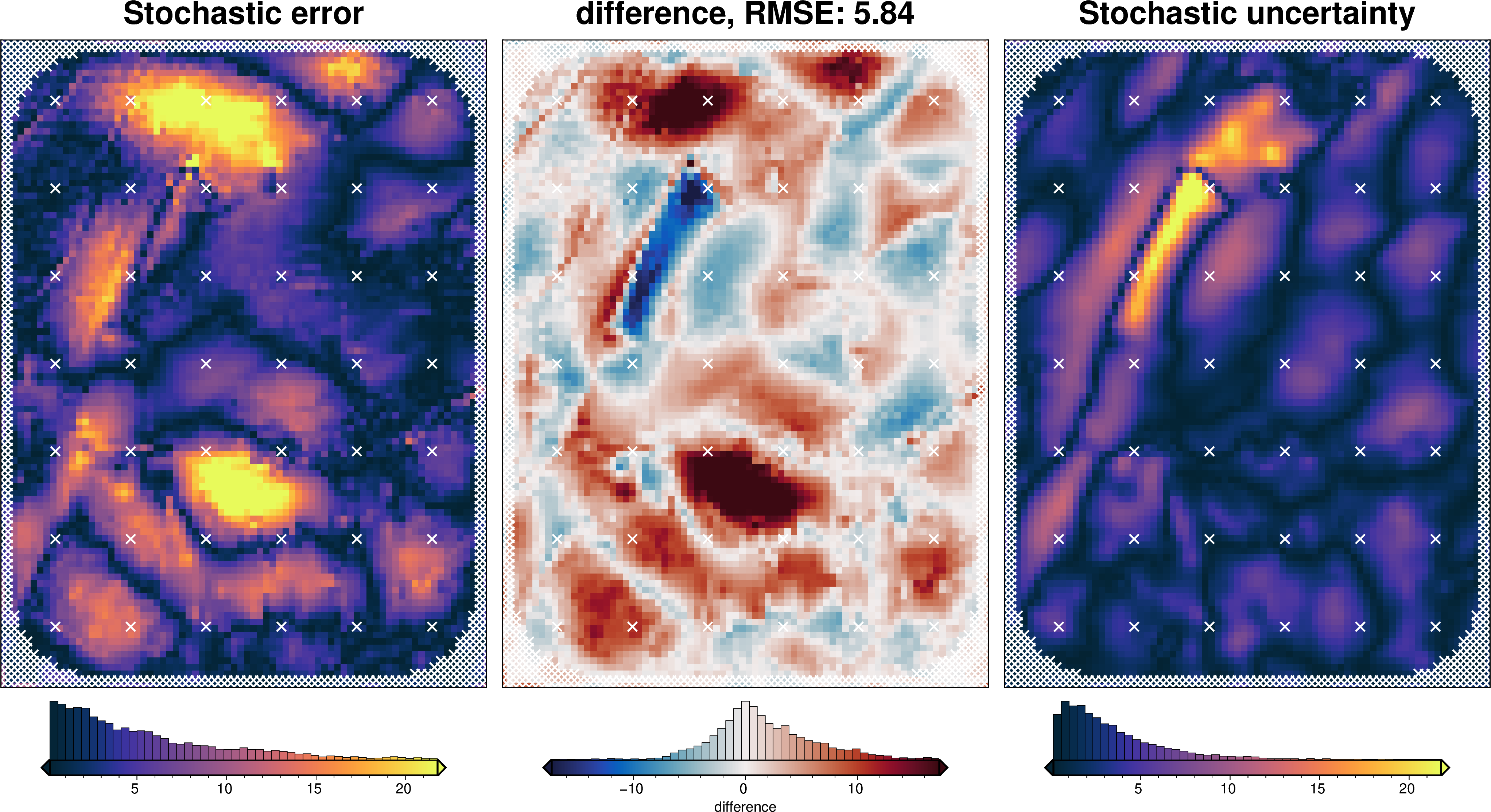

Density component¶

[53]:

# load study

with pathlib.Path(f"{fpath}_density_cv_kfolds_study.pickle").open("rb") as f:

study = pickle.load(f)

study_df = study.trials_dataframe()

study_df = study_df.sort_values("value")

# calculate zscores of values

study_df["value_zscore"] = sp.stats.zscore(study_df["value"])

# drop outliers (values with zscore > |2|)

study_df2 = study_df[(np.abs(study_df.value_zscore) < 2)]

stdev = study_df2.params_density_contrast.std()

print(f"calculated stdev: {stdev}")

# manually pick a stdev

stdev = 500

print(f"using stdev: {stdev}")

print(

f"density estimation error: {np.abs(true_density_contrast - best_density_contrast)}"

)

calculated stdev: 207.87211977996995

using stdev: 500

density estimation error: 623

[ ]:

fig = plotting.plot_cv_scores(

study.trials_dataframe().value.to_numpy(),

study.trials_dataframe().params_density_contrast.values,

param_name="Density",

logx=False,

logy=False,

)

ax = fig.axes[0]

best = study_df2.params_density_contrast.iloc[0]

upper = best + stdev

lower = best - stdev

y_lims = ax.get_ylim()

ax.vlines(best, ymin=y_lims[0], ymax=y_lims[1], color="r")

ax.vlines(upper, ymin=y_lims[0], ymax=y_lims[1], label="+/- std")

ax.vlines(lower, ymin=y_lims[0], ymax=y_lims[1])

x_lims = ax.get_xlim()

ax.set_xlim(

min(x_lims[0], lower),

max(x_lims[1], upper),

)

ax.legend()

print("best:", best, "\nstd:", stdev, "\n+1std:", upper, "\n-1std:", lower)

best: 2099

std: 500

+1std: 2599

-1std: 1599

[55]:

density_dict = {

"density_contrast": {

"distribution": "normal",

"loc": best_density_contrast,

"scale": stdev,

},

}

fname = f"{fpath}_uncertainty_density"

# delete files if already exist

for p in pathlib.Path().glob(f"{fname}*"):

p.unlink(missing_ok=True)

uncert_density_results = uncertainty.full_workflow_uncertainty_loop(

fname=fname,

runs=10,

parameter_dict=density_dict,

**uncert_kwargs,

)

stats_ds = synth_plotting.uncert_plots(

uncert_density_results,

inversion_region,

bathymetry,

deterministic_bathymetry=final_topography,

constraint_points=constraint_points[constraint_points.inside],

weight_by="constraints",

)

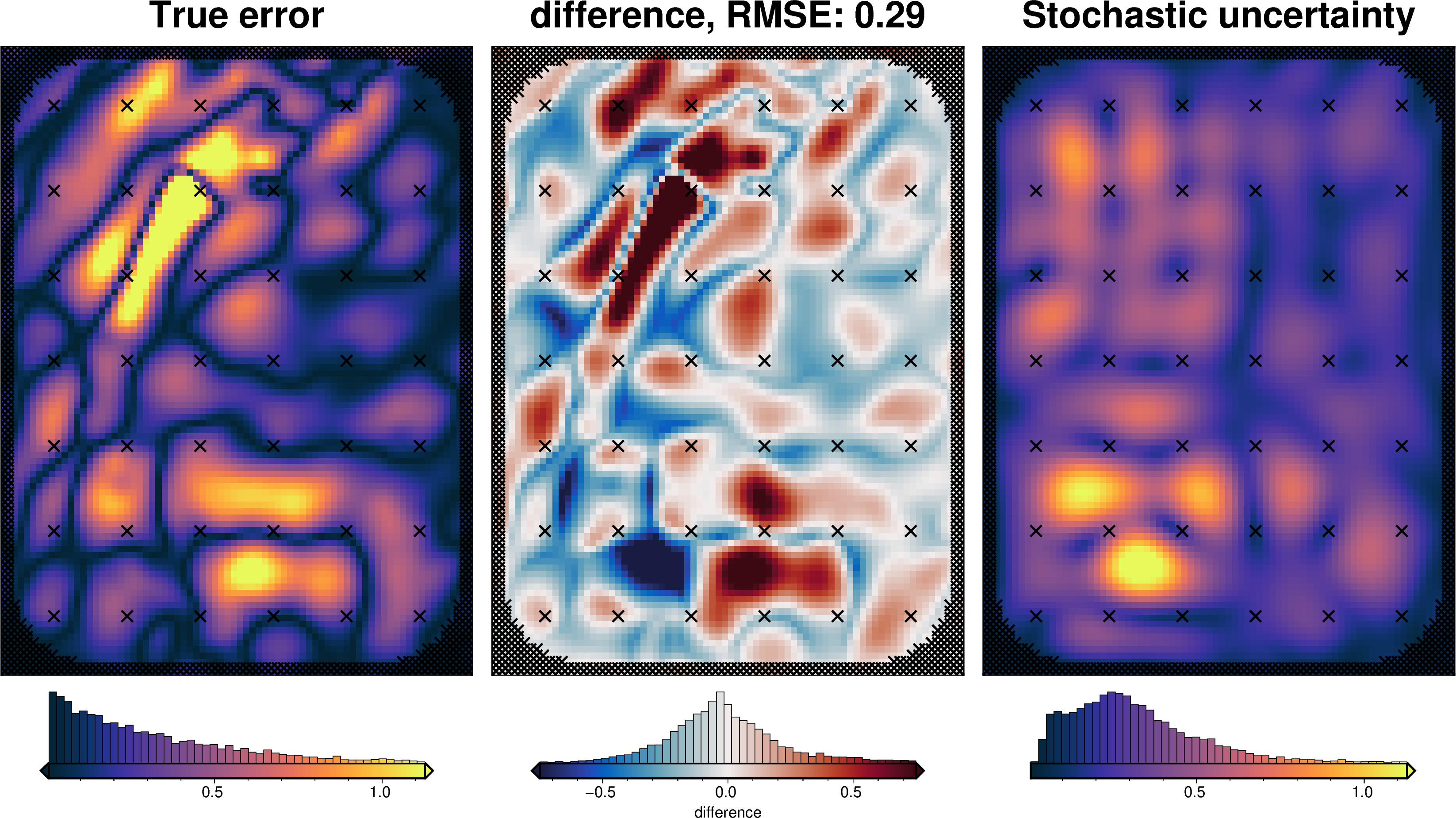

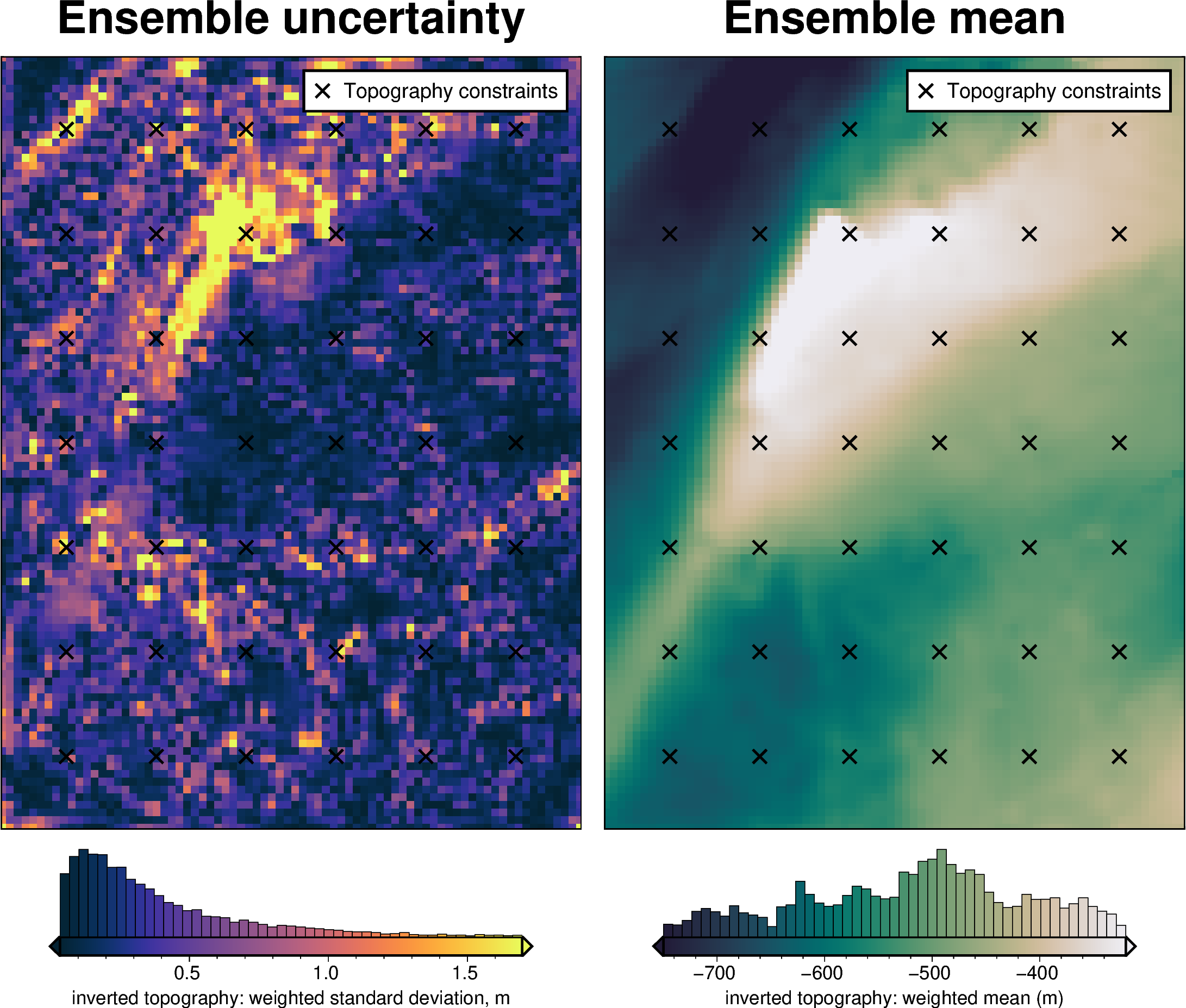

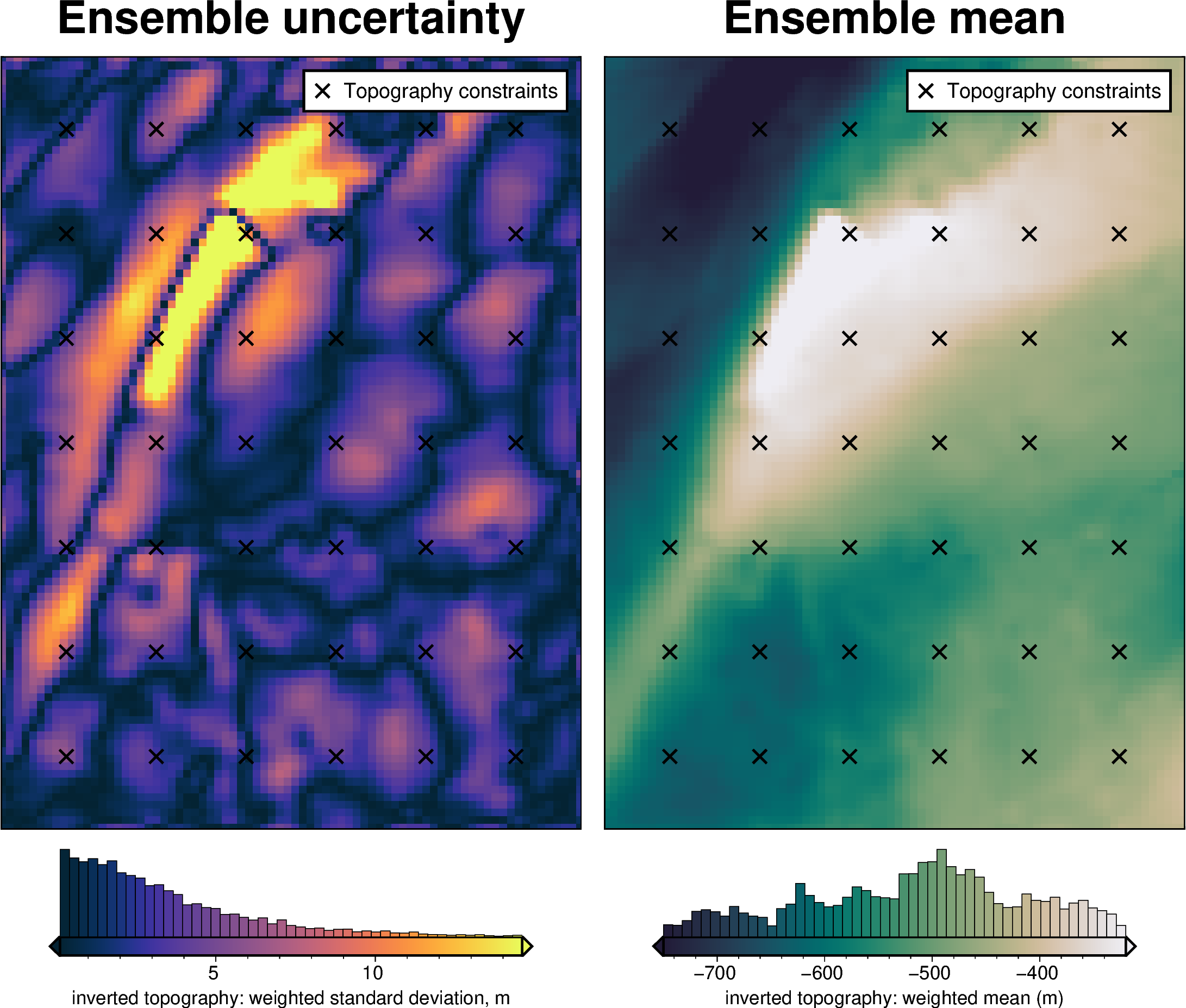

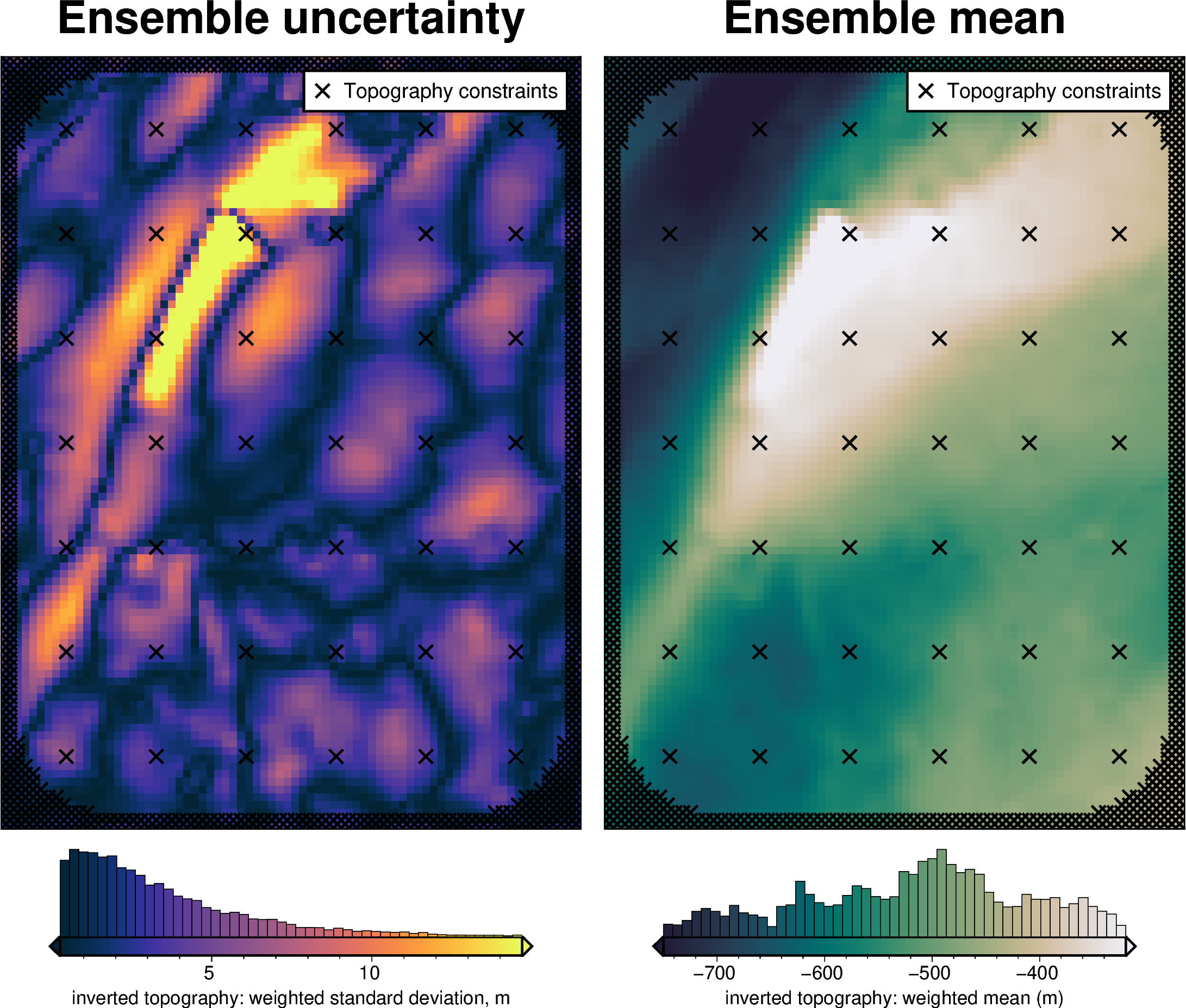

Total uncertainty¶

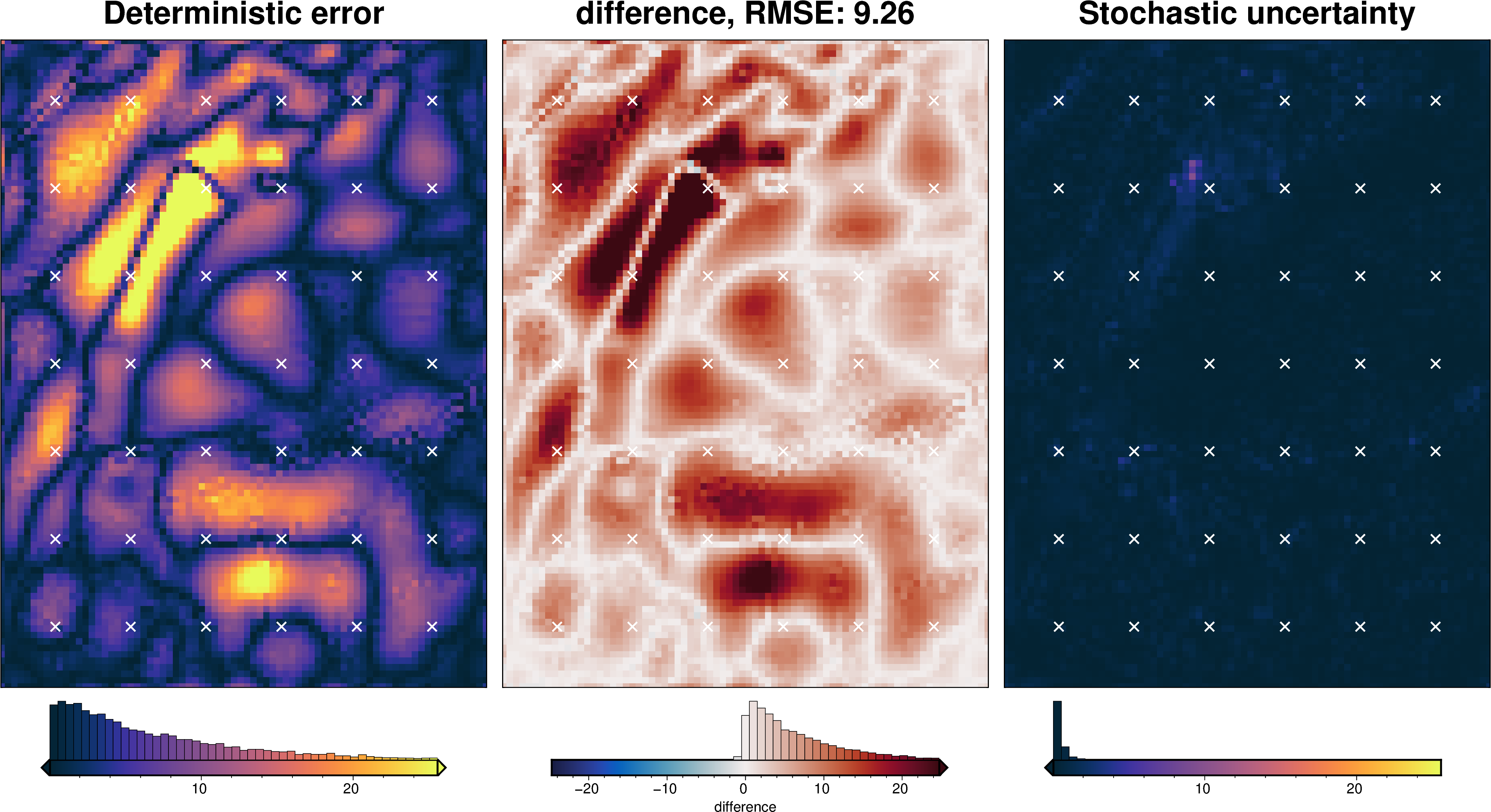

[56]:

fname = f"{fpath}_uncertainty_full"

# delete files if already exist

for p in pathlib.Path().glob(f"{fname}*"):

p.unlink(missing_ok=True)

uncert_results = uncertainty.full_workflow_uncertainty_loop(

fname=fname,

runs=20,

parameter_dict=solver_dict | density_dict,

**uncert_kwargs,

)

stats_ds = synth_plotting.uncert_plots(

uncert_results,

inversion_region,

bathymetry,

deterministic_bathymetry=final_topography,

constraint_points=constraint_points,

weight_by="constraints",

)

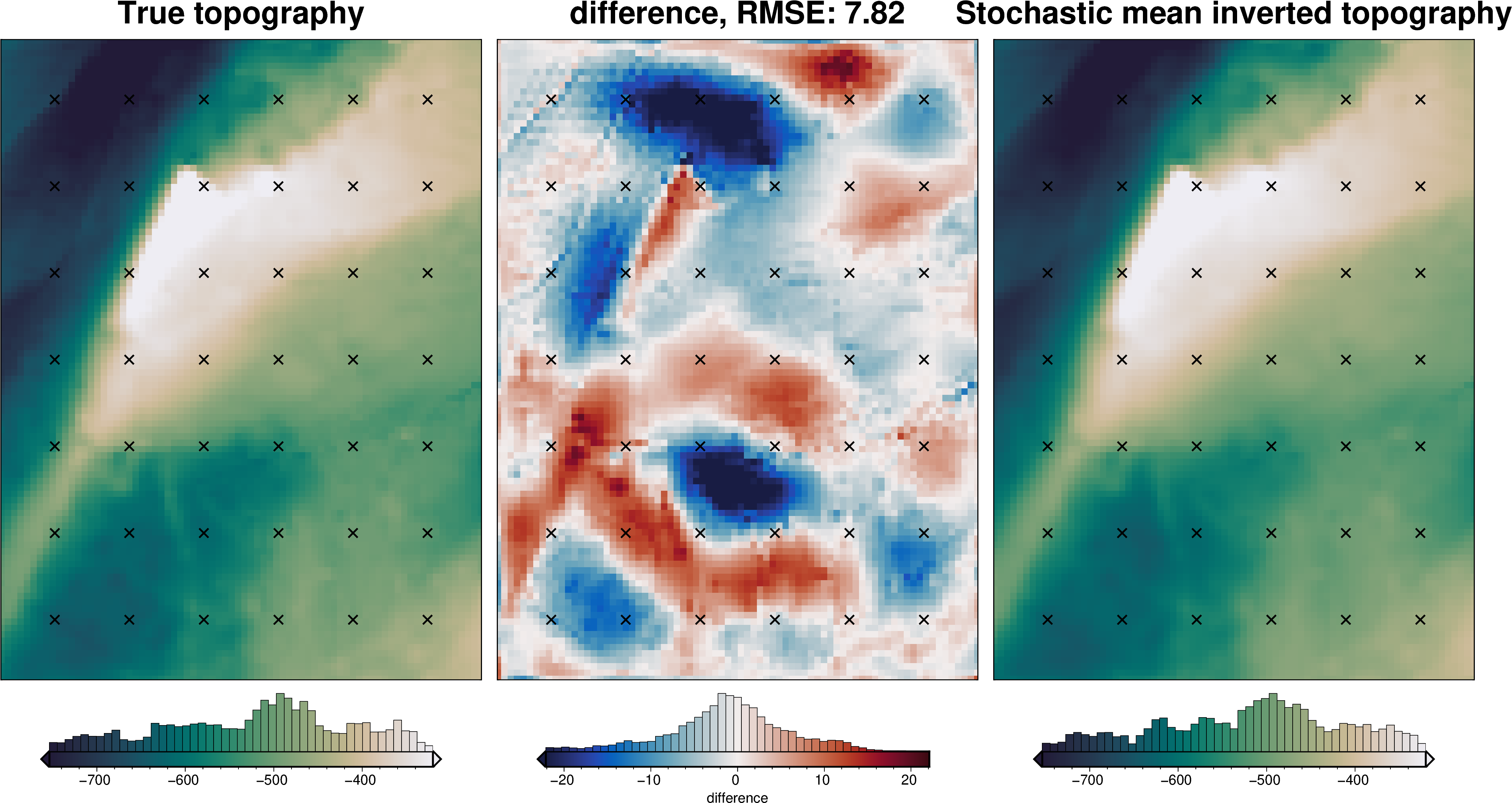

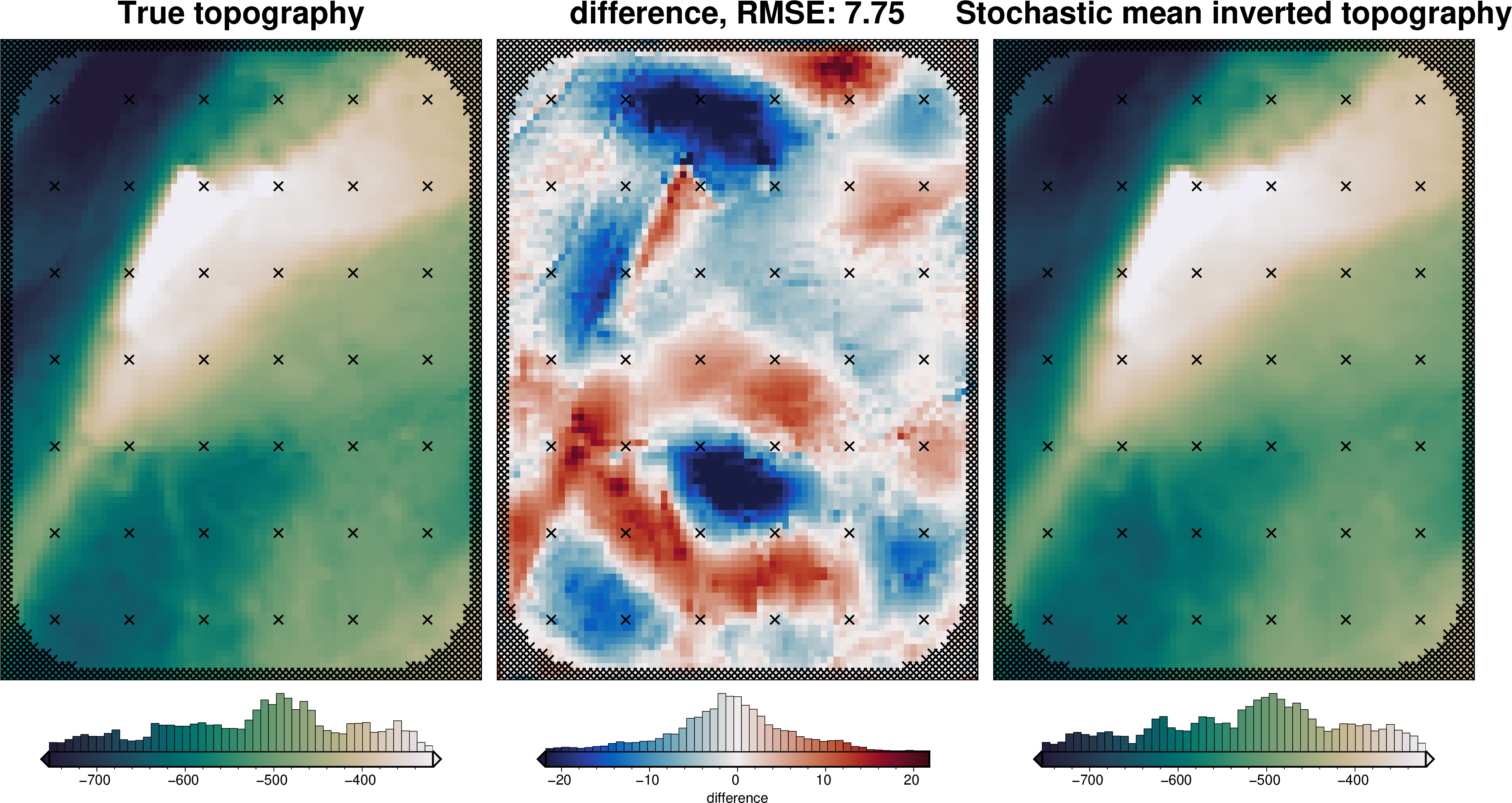

Comparing results¶

[57]:

results = [

uncert_results,

uncert_density_results,

uncert_damping_results,

]

# get cell-wise stats for each ensemble

stats = []

for r in results:

ds = uncertainty.merged_stats(

results=r,

plot=False,

constraints_df=constraint_points,

weight_by="constraints",

region=inversion_region,

)

stats.append(ds)

[58]:

names = [

"full",

"density",

"damping",

]

# get the standard deviation of the ensemble of ensembles

stdevs = []

for i, s in enumerate(stats):

stdevs.append(s.weighted_stdev.rename(f"{names[i]}_stdev"))

merged = xr.merge(stdevs)

merged

[58]:

<xarray.Dataset> Size: 186kB

Dimensions: (northing: 101, easting: 76)

Coordinates:

* northing (northing) float64 808B -1.6e+06 -1.598e+06 ... -1.4e+06

* easting (easting) float64 608B -4e+04 -3.8e+04 ... 1.08e+05 1.1e+05

Data variables:

full_stdev (northing, easting) float64 61kB 2.206 1.286 ... 1.54 1.362

density_stdev (northing, easting) float64 61kB 0.6075 0.5808 ... 0.8031

damping_stdev (northing, easting) float64 61kB 1.991 1.304 ... 0.9936[59]:

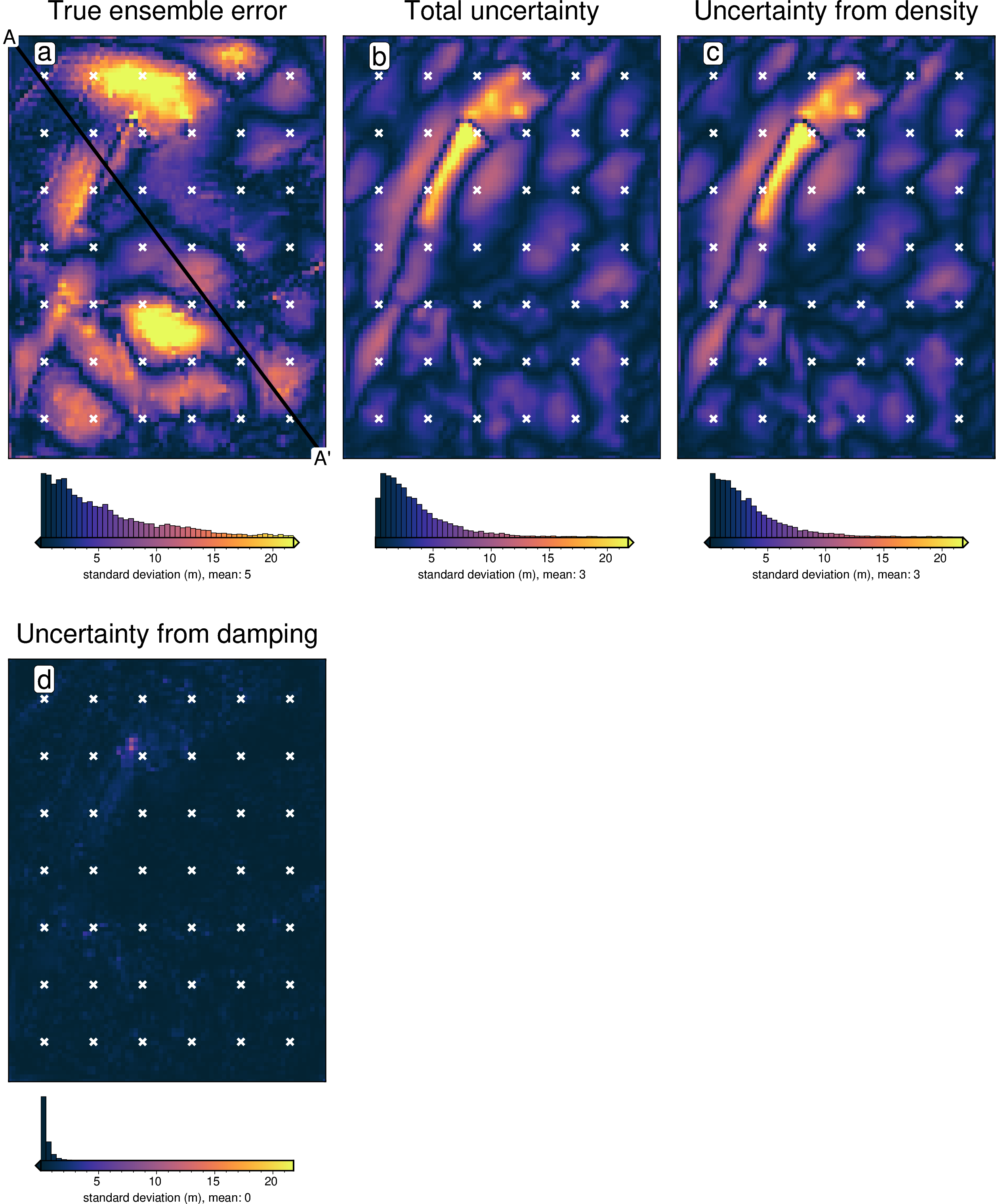

titles = [

"True ensemble error",

"Total uncertainty",

"Uncertainty from density",

"Uncertainty from damping",

]

grids = list(merged.data_vars.values())

grids.insert(0, np.abs(stats[0].weighted_mean - bathymetry))

cpt_lims = polar_utils.get_combined_min_max(grids, robust=True)

fig_height = 9

for i, g in enumerate(grids):

xshift_amount = 1

if i == 0:

fig = None

origin_shift = "initialize"

elif i == 3:

origin_shift = "both_shift"

xshift_amount = -2

else:

origin_shift = "xshift"

fig = maps.plot_grd(

grid=g,

fig_height=fig_height,

title=titles[i],

title_font="16p,Helvetica,black",

cmap="thermal",

cpt_lims=cpt_lims,

robust=True,

cbar_label=f"standard deviation (m), mean: {int(np.nanmean(g))}",

hist=True,

hist_bin_num=50,

fig=fig,

origin_shift=origin_shift,

xshift_amount=xshift_amount,

)

fig.plot(

x=constraint_points[constraint_points.inside].easting,

y=constraint_points[constraint_points.inside].northing,

style="x.2c",

fill="white",

pen="1.5p,white",

)

fig.text(

position="TL",

text=f"{string.ascii_lowercase[i]}",

fill="white",

pen=True,

font="16p,Helvetica,black",

offset="j.6/.2",

clearance="+tO",

no_clip=True,

)

if i == 0:

# plot profiles location, and endpoints on map

start = [inversion_region[0], inversion_region[3]]

stop = [inversion_region[1], inversion_region[2]]

fig.plot(

vd.line_coordinates(start, stop, size=100),

pen="2p,black",

)

fig.text(

x=start[0],

y=start[1],

text="A",

fill="white",

font="12p,Helvetica,black",

justify="CM",

clearance="+tO",

no_clip=True,

)

fig.text(

x=stop[0],

y=stop[1],

text="A' ",

fill="white",

font="12p,Helvetica,black",

justify="CM",

clearance="+tO",

no_clip=True,

)

fig.show()

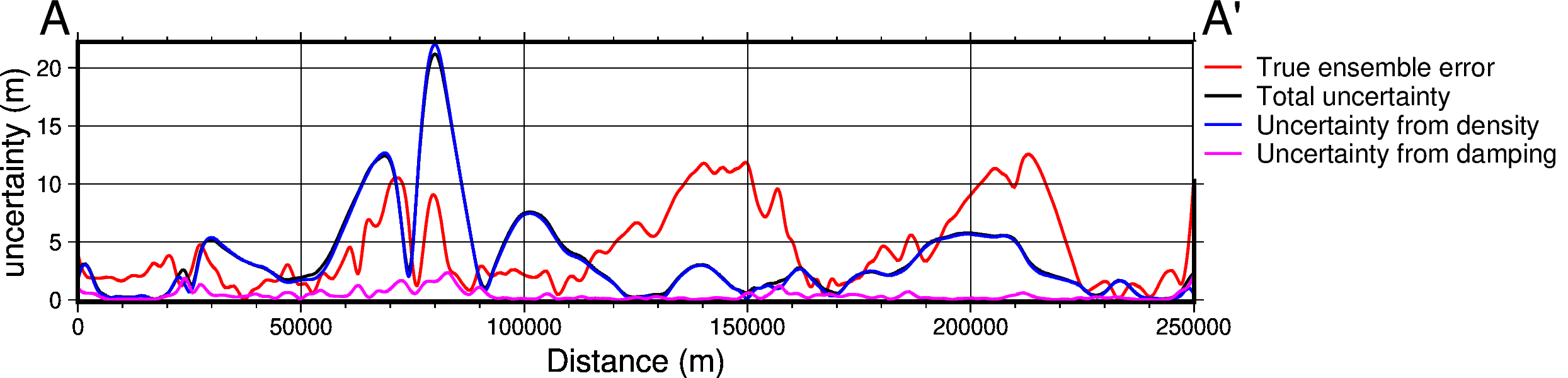

[60]:

data_dict = profiles.make_data_dict(

names=titles,

grids=grids,

colors=[

"red",

"black",

"blue",

"magenta",

"cyan",

"green",

"purple",

],

)

fig, df_data = profiles.plot_data(

"points",

start=[inversion_region[0], inversion_region[3]],

stop=[inversion_region[1], inversion_region[2]],

num=10000,

fig_height=4,

fig_width=15,

data_dict=data_dict,

data_legend_loc="jTR+jTL",

data_legend_box="+gwhite",

data_buffer=0.01,

data_frame=["neSW", "xafg+lDistance (m)", "yag+luncertainty (m)"],

share_yaxis=True,

start_label="A",

end_label="A' ",

)

fig.show()

grdtrack [WARNING]: Some input points were outside the grid domain(s).

grdtrack [WARNING]: Some input points were outside the grid domain(s).

grdtrack [WARNING]: Some input points were outside the grid domain(s).

grdtrack [WARNING]: Some input points were outside the grid domain(s).

[61]:

# save results

merged.to_netcdf(f"{fpath}_sensitivity.nc")

[62]:

stats_ds.to_netcdf(f"{fpath}_uncertainty.nc")

[ ]: